Self-Learning AI: Concepts, Applications, and Future Prospects

This article explores Self-Learning AI, a field in which AI models continuously evolve through interaction with data under minimal human oversight. We examine its foundational concepts, including the reinforcement and self-supervised learning approaches that enable systems to adapt autonomously. Through examples in healthcare, finance, autonomous vehicles, and engineering design, we show how these technologies are already reshaping industries. Finally, we look at future prospects, including how algorithmic efficiency and human-AI collaboration could accelerate innovation across sectors. More intuitive, responsive, and intelligent systems will complement human capabilities while addressing complex challenges previously considered intractable.

What is Self-Learning AI?

A self-learning AI acquires and updates knowledge independently, without being explicitly programmed for each task. Such an adaptive system improves through trial and error. Historically, AI learning has progressed through three stages:

- Traditional AI relies on pre-programmed, rule-based systems that remain static.

- Machine Learning learns and adjusts based on data. It still needs retraining or intervention for new data or scenarios.

- Self-learning AI (SLAI) dynamically adjusts without explicit retraining, leveraging feedback and continuous learning.

Thus, the key advantages of Self-Learning Artificial Intelligence (SLAI) over traditional AI systems and traditional Machine Learning are its ability to generalize across different domains and improve autonomously, reducing the need for constant human assistance.

A self-learning system starts by interacting with its users or environment. Then, it observes the outcomes of its actions, using this feedback to refine its behavior. Reinforcement learning, where an agent learns by maximizing cumulative rewards, is often employed.

Learning has a mathematical foundation. A reward function assigns a value to each action taken in a given state, and the agent updates its knowledge based on the rewards it receives. The goal is to maximize the expected long-term (cumulative) reward. The system refines its strategy by iteratively updating its estimates of how valuable each action is, much like a human learning from experience.
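
The article does not name a specific update rule. One common concrete form, given here only as an illustration, is the tabular Q-learning update. The symbols are assumptions introduced for this sketch: $Q(s, a)$ is the estimated value of action $a$ in state $s$, $\alpha$ is a learning rate, $\gamma$ is a discount factor, and $r_{t+1}$ is the reward received after acting at step $t$. The first line is the cumulative reward being optimized, and the second is the iterative update:

```latex
G_t = \sum_{k=0}^{\infty} \gamma^{k}\, r_{t+k+1}, \qquad 0 \le \gamma < 1

Q(s_t, a_t) \leftarrow Q(s_t, a_t) + \alpha \left[ r_{t+1} + \gamma \max_{a'} Q(s_{t+1}, a') - Q(s_t, a_t) \right]
```

The bracketed term is the gap between the reward-based target and the current estimate, so each update nudges the estimate toward what the agent actually experienced.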

Artificial General Intelligence (AGI) represents the ultimate goal of SLAI. Unlike narrow AI, which is designed for specific tasks, AGI aims to perform tasks across multiple domains with humanlike adaptability.

By leveraging these learning mechanisms, SLAI moves beyond static rule-based approaches and evolves into adaptable systems that may bring AGI closer.

Neural Networks (NNs) are key enablers of SLAI. They consist of interconnected layers of nodes (neurons) that process data through weighted connections. Deep learning, which uses NNs with multiple layers (hence “deep”), automatically extracts hierarchical features from raw inputs. Through this structure, Artificial Intelligence can learn complex patterns, adjust its parameters, and improve its performance autonomously, enabling it to adapt without explicit supervision.
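
As a rough illustration of "layers of nodes with weighted connections", the sketch below runs one input through a tiny two-layer network in plain Python. The weights, biases, layer sizes, and the choice of `tanh` as the activation are all hypothetical values picked for the example, and no training happens here.

```python
import math

def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum per neuron, then tanh."""
    return [
        math.tanh(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

# Hypothetical parameters for a 3-input -> 2-hidden -> 1-output network.
W1 = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]]
B1 = [0.0, 0.1]
W2 = [[1.0, -1.0]]
B2 = [0.0]

def forward(x):
    """Pass the input through the hidden layer, then the output layer."""
    hidden = dense(x, W1, B1)
    return dense(hidden, W2, B2)

output = forward([1.0, 2.0, 3.0])
```

Learning, in this picture, means adjusting the numbers in `W1`, `B1`, `W2`, and `B2` so that the outputs get closer to what is wanted.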

Hebbian learning states that “cells that fire together wire together.” In self-learning systems, the simultaneous activation of components strengthens the connections between them, mimicking how experiences enhance cognitive links in the brain and allowing the system to improve through interactions and feedback.
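
A minimal sketch of the Hebbian idea, assuming the simplest textbook form of the rule (weight change = learning rate × input activity × output activity). The function name `hebbian_update` and the learning rate are illustrative choices, not something the article specifies.

```python
def hebbian_update(weights, pre, post, lr=0.1):
    """Return new weights after one Hebbian step.

    weights[i][j] connects input unit j to output unit i and grows by
    lr * post[i] * pre[j], so only co-active pairs are strengthened.
    """
    return [
        [w + lr * post_i * pre_j for w, pre_j in zip(row, pre)]
        for row, post_i in zip(weights, post)
    ]

weights = [[0.0, 0.0], [0.0, 0.0]]
pre = [1.0, 0.0]    # only the first input unit is active
post = [1.0, 0.0]   # only the first output unit is active
for _ in range(5):
    weights = hebbian_update(weights, pre, post)
```

After five co-activations the connection between the two active units has grown to about 0.5, while the other three weights stay at zero. This plain rule grows without bound, so practical variants usually add some form of normalization or decay.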

Like neuroplasticity, SLAI modifies its internal structure during learning, increasing its precision. This adaptability helps it respond to environmental changes, like humans adjusting behaviors based on new experiences.

Like the human brain, SLAI needs mechanisms for storing and retrieving experiences. The brain uses long-term memory networks for this purpose. Similarly, SLAI retains valuable inputs and patterns.

The brain adjusts responses based on sensory input. SLAI also changes its behavior by processing feedback and updating internal models to perform optimally in changing environments.

The brain uses predictive models to foresee events. SLAI develops similar capabilities, enhancing decision-making and refining strategies based on historical data for future interactions.

Types of Self-Learning AI Systems

SLAI differs from traditional AI in that it continuously improves through interaction with input data rather than relying solely on large datasets and predefined rules. Unlike conventional machine learning, which often depends on static training sets, SLAI adjusts dynamically. As these models process more data, they refine their performance without requiring explicit human intervention. This adaptation enhances the training process, making SLAI more efficient and better able to uncover complex patterns than traditional machine learning models.

Self-Supervised Learning

Self-supervised learning is a type of machine learning where the model learns from unlabeled training data by generating its own labels. It creates tasks, such as predicting missing parts of data (e.g., predicting the next word in a sentence), to build representations without needing explicit human annotations. This method is crucial for Artificial Intelligence development because it allows models to improve using vast amounts of unlabeled data, enhancing performance in natural language processing, computer vision, and other areas.

Self-supervised learning is often used in NLP (e.g., transformer pre-training), speech recognition, and computer vision (e.g., contrastive learning).

The core idea of self-supervised learning is to define a surrogate task where the model can predict part of the data from another part, effectively learning meaningful representations without human intervention. The method usually involves two phases:

- In the pretext (pretraining) phase, the model learns useful representations from raw inputs by solving an artificially created task (e.g., predicting missing words in a sentence, image patch order, or masked pixels).

- In the downstream (fine-tuning) phase, the learned representations are fine-tuned for actual tasks such as classification, object detection, or translation.
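
To make the pretext phase concrete, the sketch below shows how training labels can be manufactured from raw text alone by hiding one word per sentence. It covers only the data-preparation step, not a model. The `[MASK]` token, the function name, and the two sample sentences are assumptions made for illustration.

```python
import random

MASK = "[MASK]"

def make_masked_example(sentence, rng):
    """Hide one word; return (masked tokens, position, hidden word as label)."""
    tokens = sentence.split()
    position = rng.randrange(len(tokens))
    label = tokens[position]
    masked = tokens[:position] + [MASK] + tokens[position + 1:]
    return masked, position, label

rng = random.Random(0)  # fixed seed so the example is reproducible
corpus = [
    "the agent learns from raw data",
    "labels come from the data itself",
]
examples = [make_masked_example(sentence, rng) for sentence in corpus]
```

Each example pairs an input (the sentence with a gap) with a target (the hidden word), and no human annotated anything. A model trained to fill such gaps would then be fine-tuned in the downstream phase.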

Unsupervised Self-Learning (Speculative)

Unsupervised learning in Artificial Intelligence involves models identifying patterns in raw inputs without labeled examples. Unlike supervised learning, which relies on predefined input-output pairs, unsupervised learning uncovers relationships independently. It is commonly applied in clustering, dimensionality reduction, and anomaly detection, enabling Artificial Intelligence to interpret vast unstructured datasets, although such models are typically static once trained.

A self-learning unsupervised system would go further and improve autonomously over time. It refines its understanding through iterative learning cycles, adjusting its representations as new data arrives. Unlike self-supervised learning, it evolves based on statistical properties and feedback mechanisms without predefined tasks. Techniques like deep clustering and contrastive learning (the latter often also classed as self-supervised) point in this direction.

Deep clustering employs NNs for feature refinement and clustering, while contrastive learning helps Artificial Intelligence distinguish similarities and differences in data without labels, enhancing feature understanding. Some NNs adjust their learning through internal checks instead of external rewards. An unsupervised self-learning system would be invaluable for AI functioning without human input, such as autonomous robots, adaptable recommendation systems, and scientific discovery models analyzing large datasets.
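
The grouping step at the heart of clustering can be sketched with plain k-means, shown below on hand-made 2-D points. This is deliberately not deep clustering: in deep clustering, a neural network would first learn the features that are then grouped. The data points and starting centroids are hypothetical.

```python
def mean_point(cluster):
    """Average position of a non-empty list of 2-D points."""
    n = len(cluster)
    return (sum(p[0] for p in cluster) / n, sum(p[1] for p in cluster) / n)

def kmeans(points, centroids, iterations=10):
    """Plain k-means: assign each point to its nearest centroid, then re-center."""
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            distances = [(p[0] - c[0]) ** 2 + (p[1] - c[1]) ** 2 for c in centroids]
            clusters[distances.index(min(distances))].append(p)
        # Keep the old centroid if a cluster ends up empty.
        centroids = [mean_point(cl) if cl else c for cl, c in zip(clusters, centroids)]
    return centroids, clusters

points = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.1), (8.0, 8.0), (8.2, 7.9), (7.9, 8.3)]
centroids, clusters = kmeans(points, centroids=[points[0], points[3]])
```

No labels are supplied at any point. The two groups emerge purely from the distances between points.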

Reinforcement Learning (Self-Learning)

Self-learning in reinforcement learning (RL) stems from the agent's ability to improve independently, adjusting its strategies based on experience. Unlike standard machine learning models, which rely on static datasets, a reinforcement learning agent continuously updates its knowledge, making RL one of AI's most dynamic and adaptable forms.

RL is an SLAI approach in which an agent interacts with an environment and learns by trial and error. Instead of relying on labeled data or predefined instructions, the system receives feedback in the form of rewards or penalties based on its actions.

Unlike traditional supervised learning, RL develops knowledge autonomously through exploration. The agent typically starts with little or no prior knowledge, taking random actions and refining its behavior based on the observed consequences.

Over time, it balances exploiting known strategies against exploring new possibilities to enhance future performance, guided by policies that determine actions and value functions that estimate long-term expected rewards.

Advanced reinforcement learning systems built on deep RL, such as AlphaGo and OpenAI’s Dota 2 agents (OpenAI Five), have achieved superhuman performance in competitive environments.
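
The exploration/exploitation balance and the role of a value function can be sketched with tabular Q-learning and an epsilon-greedy policy. Everything specific here is an assumption made for the example: the five-cell corridor environment, the reward of 1 at the right-hand end, and the hyperparameter values.

```python
import random

N_STATES = 5            # corridor cells 0..4; reaching cell 4 ends the episode
ACTIONS = (-1, +1)      # step left or step right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2   # assumed hyperparameters

def step(state, action):
    """Hypothetical environment: reward 1 on reaching the last cell, else 0."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def train(episodes=200, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # value estimate per (state, action)
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore with probability EPSILON (or on ties); otherwise exploit.
            if rng.random() < EPSILON or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, ACTIONS[a])
            # Move the estimate toward reward plus discounted best future value.
            target = reward + (0.0 if done else GAMMA * max(q[next_state]))
            q[state][a] += ALPHA * (target - q[state][a])
            state = next_state
    return q

q_table = train()
greedy_policy = ["left" if left > right else "right" for left, right in q_table[:-1]]
```

The agent starts with all estimates at zero and wanders at random. Once it stumbles on the reward, the value estimates propagate backward along the corridor, and the greedy policy settles on moving right from every cell.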

Some Applications

Self-Learning Artificial Intelligence enables learning software to improve autonomously through experience. This boosts the speed of response and decision-making in healthcare, fraud detection in finance, autonomous navigation, and engineering design.

Healthcare

In healthcare, SLAI models analyze medical images and patient information to detect diseases such as cancer. Algorithms can improve diagnostic precision over time by learning from vast datasets of medical scans and clinical reports. Artificial Intelligence can also support personalized treatment plans by predicting how patients are likely to respond to different therapies.

Finance

In finance, SLAI plays a key role in fraud detection: it identifies suspicious transactions and adjusts to evolving fraud patterns. Unlike fixed rule-based criteria, SLAI models keep refining their ability to distinguish legitimate from fraudulent activity. AI also enhances risk assessment by analyzing market trends, customer credit behavior, and economic indicators to provide dynamic risk evaluations.
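
As a toy illustration of a detector that adapts as it sees more transactions, the sketch below keeps a running mean and variance (Welford's method) and flags amounts that deviate strongly from what it has seen so far. Real fraud systems are far more elaborate. The class name, the z-score threshold, the warm-up length, and the transaction amounts are all hypothetical.

```python
import math

class RunningAnomalyDetector:
    """Flags values far from a running mean, updated one observation at a time."""

    def __init__(self, threshold=3.0, warmup=5):
        self.threshold = threshold   # assumed z-score cutoff
        self.warmup = warmup         # observations required before flagging
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0                # running sum of squared deviations

    def observe(self, value):
        """Score the value against history, then fold it into the statistics."""
        flagged = False
        if self.count >= self.warmup:
            std = math.sqrt(self.m2 / (self.count - 1))
            flagged = std > 0 and abs(value - self.mean) / std > self.threshold
        # Welford's online update of mean and squared deviations.
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (value - self.mean)
        return flagged

detector = RunningAnomalyDetector()
amounts = [20.0, 25.0, 22.0, 19.0, 24.0, 21.0, 23.0, 950.0, 22.0]
flags = [detector.observe(amount) for amount in amounts]
```

Only the 950.0 transaction is flagged. Note one design weakness of this simple version: the outlier is still folded into the statistics, which inflates the variance afterward, so a production system would likely treat flagged values differently.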

Autonomous Vehicles

SLAI enables advanced navigation and control for autonomous vehicles. AI-powered driving improves decision-making through reinforcement learning, adapting to changing road conditions, pedestrian movements, and unpredictable events. These systems process real-time sensor data to optimize route planning, obstacle avoidance, and fuel efficiency.

Challenges in Implementation

SLAI faces several challenges that affect its efficiency and deployment. Data quality is critical, as poor data can lead to inaccurate models. Many SLAI systems, especially those with supervised components, depend on labeled data, which is often scarce. Data preparation is labor-intensive, requiring cleaning and formatting, while continuous retraining is necessary to maintain accuracy but can be resource-intensive.

Integrating self-learning AI into existing workflows often requires significant upfront investment. Additionally, unpredictability can arise, particularly in RL systems operating without human oversight. Ensuring robustness and reliability remains challenging, as SLAI must handle dynamic and noisy real-world data.

Self-Learning AI in Engineering Design & Simulation

SLAI enables models to refine their knowledge continuously, so engineers do not have to retrain them from scratch each time new datasets arrive. This makes self-learning models strong enablers of AI adoption in industry.
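
The difference between refining a model and retraining it from scratch can be sketched with an online linear model that takes one small gradient step per new sample. This is a generic illustration, not a description of any particular product. The class name, the learning rate, and the synthetic data (which follows y = 2x + 1) are assumptions.

```python
class OnlineLinearModel:
    """y ~ w * x + b, updated one sample at a time by stochastic gradient descent."""

    def __init__(self, lr=0.05):
        self.w = 0.0
        self.b = 0.0
        self.lr = lr   # assumed learning rate

    def predict(self, x):
        return self.w * x + self.b

    def update(self, x, y):
        """One gradient step on the squared error of a single new sample."""
        error = self.predict(x) - y
        self.w -= self.lr * error * x
        self.b -= self.lr * error

model = OnlineLinearModel()
# Hypothetical stream of (input, measured output) pairs following y = 2x + 1.
stream = [(i / 10.0, 2.0 * (i / 10.0) + 1.0) for i in range(-10, 11)]
# The short stream is replayed several times only to keep the demo small.
for _ in range(200):
    for x, y in stream:
        model.update(x, y)
```

Each call to `update` keeps everything the model has learned so far and adjusts it slightly, which is what lets new data be absorbed without restarting training.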

In regression problems, supervised learning algorithms identify relationships between variables to predict output values. Standard methods include linear regression, decision trees, and NNs.
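
For the simplest of these methods, linear regression, the fit can be written in a few lines of plain Python using the closed-form least-squares solution. The load and deflection values below are made-up numbers for illustration only.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

# Hypothetical data: beam deflection (mm) measured at several loads (kN).
loads = [1.0, 2.0, 3.0, 4.0]
deflections = [2.1, 3.9, 6.2, 7.8]
slope, intercept = fit_line(loads, deflections)
predicted = slope * 5.0 + intercept   # prediction for an unseen 5 kN load
```

On this data the fit gives a slope of about 1.94 and an intercept of about 0.15, so the predicted deflection at 5 kN is about 9.85 mm.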

SLAI reduces R&D effort in verification and validation. By automating learning from prior experiments and engineering simulations, it cuts manual testing and model revalidation during product development, which shortens validation and verification cycles.

Neural Concept: AI-Powered Design Optimization

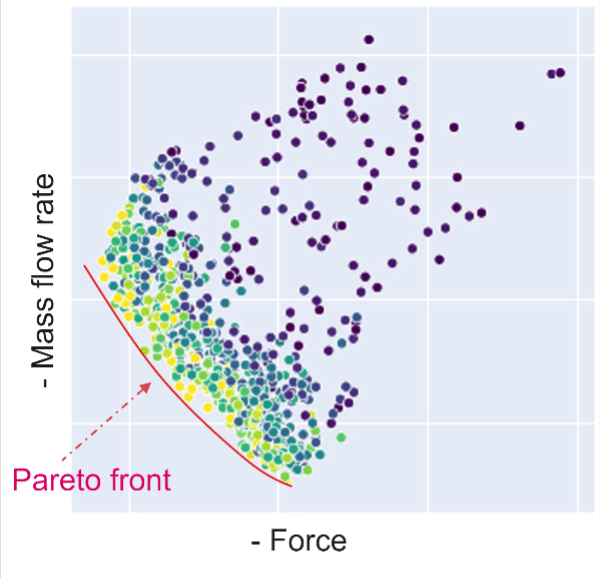

Neural Concept's 3D deep learning technology combines supervised learning with key self-learning principles applied specifically to engineering problems. The Neural Concept platform uses past simulation data, so engineers can build on existing knowledge rather than starting each project from zero. This approach supports design optimization by allowing the AI to make predictions based on previously analyzed designs and simulation results.

The geometric deep learning framework processes 3D design data to generate rapid performance predictions, reducing the computational burden of traditional simulation methods. Engineers can evaluate multiple design variations quickly using these predictions instead of running time-intensive simulations for each iteration. This efficiency translates into tangible benefits: shorter simulation times, decreased physical prototyping needs, and more focused R&D resource allocation.

While the Neural Concept platform improves its prediction accuracy over time as it processes more data, it functions within a supervised learning paradigm that requires engineering expertise to guide the learning process and validate results.

The prediction capabilities of such a system cover structural analysis (static and explicit), electromagnetism, and fluid dynamics.

Future Prospects

SLAI will make industries more efficient while becoming more widely integrated and increasingly collaborative with humans. As algorithms advance, Artificial Intelligence will expand into new sectors and enhance our decisions rather than replace them. Future models will learn more efficiently, reducing their dependence on vast datasets. Transfer, few-shot, and self-supervised learning help AI generalize better and adapt faster.

Conclusion

Self-Learning AI represents the next step in Artificial Intelligence, moving beyond static models toward systems that continuously improve through interaction with data and environments. From healthcare diagnostics to financial fraud detection and autonomous vehicles, SLAI is transforming industries by reducing human intervention while increasing adaptability. Despite challenges in data quality, integration, and ethics, the trajectory is clear. Future AI systems will learn more efficiently from less data, expand into new domains, and increasingly complement human expertise rather than replace it. As these technologies mature, the collaboration between human creativity and machine learning capabilities will unlock innovations across all sectors. As SLAI automates repetitive tasks and provides real-time insights, people will be able to focus more on creativity, strategy, and problem-solving.

FAQ

How can an AI train itself without explicit feedback?

SLAI can learn from data without explicit labeled feedback. It uses techniques such as clustering and anomaly detection, and in some cases RL, where the system improves based on the environment’s responses rather than manually labeled data. Clustering, for example, groups unlabeled data based on shared characteristics or attributes.

How does self-learning AI differ from traditional Machine Learning models?

Traditional machine learning typically relies on fixed, often labeled, datasets for training and needs retraining when the data changes. SLAI autonomously explores and adjusts to new data, identifying relationships or optimal actions without manual intervention or labels.

Can SLAI models update themselves after deployment?

SLAI models can update themselves after deployment, often through continuous learning or adaptive algorithms. This allows them to improve over time based on data or interactions with their environment, making them dynamic and capable of evolving beyond initial training.

What are the ethical considerations surrounding Artificial Intelligence and Self-Learning?

Ethical concerns include:

- Bias: SLAI might perpetuate or amplify biases in data.

- Accountability: It can be hard to trace the decision-making process.

- Security: SLAI could be vulnerable to adversarial attacks or manipulation.

- Transparency: Ensuring SLAI remains understandable and controllable by humans is crucial.

How does Artificial Intelligence impact job roles in various industries?

SLAI can enhance productivity, automate complex tasks, and reduce the need for manual intervention, which might lead to job displacement in some roles. However, it can also create new opportunities for jobs in AI development, oversight, and integration, demanding a shift in skills and job responsibilities across industries.