Self-Driving Car Machine Learning, Fully Explained

Self-driving cars depend on machine learning, a core branch of artificial intelligence. Their models are trained on millions of annotated driving scenarios, which lets them perceive the environment accurately and operate without human input. In real time, they interpret data from onboard sensors such as cameras, radar, and LiDAR, and use it to build a constantly updated picture of the world around them.

Thanks to machine learning, they learn to identify road signs, track nearby cars, detect pedestrians, and predict the movement of other road users. Unlike rule-based logic, machine learning models generate probabilistic assessments of what is happening and what is likely to happen next.

Self-driving cars learn from data: terabytes of recordings that capture how people behave in complex, unpredictable traffic. Thanks to artificial intelligence and various sensors, autonomous vehicles observe how a car slows at an unmarked crossing, how a pedestrian hesitates, or how a cyclist weaves through traffic. These patterns are not hard-coded; they’re inferred and continuously updated through experience.

This article explains how machine learning enables perception, prediction, and decision-making in autonomous vehicles, as well as what is required to transition from raw data to a functioning self-driving policy.

What You Will Learn in This Article

- How is Machine Learning Applied to Self-Driving Cars?

- Introduction to Autonomous Vehicles

- Examples of Machine Learning Applications in AVs

- Machine Learning Fundamentals

- Machine Learning Algorithms

- Current Trends in Autonomous System Development

- What are the Real-World Applications and Testing Environments for Self-Driving Cars?

- What are the Challenges for Self-Driving Cars?

- Safety and Ethical Considerations

- What are the Future Trends of Self-Driving Cars?

How is Machine Learning Applied to Self-Driving Cars?

Machine learning is at the heart of every major function in a self-driving car, from understanding the environment to deciding how to act, executing commands, and learning from new data. Different models handle perception, prediction, planning, and control, often running in parallel.

The following sections explain how these systems work and how ML enables autonomous vehicles to operate safely and adapt over time.
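To make the division of labor concrete, the sketch below chains the four stages in Python. It is a toy under stated assumptions, not how any production stack is built: all function and class names are hypothetical, each stage is a trivial stand-in for a learned model or optimizer, and the stages run sequentially for clarity rather than in parallel.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Obstacle:
    # Hypothetical perception output: distance ahead (m) and speed away from us (m/s).
    distance_m: float
    speed_mps: float


def perceive(raw_ranges_m: List[float]) -> List[Obstacle]:
    # Stand-in for a learned detector: each range reading (m) becomes a static obstacle.
    return [Obstacle(distance_m=r, speed_mps=0.0) for r in raw_ranges_m]


def predict(obstacles: List[Obstacle], horizon_s: float) -> List[float]:
    # Constant-velocity guess of each obstacle's distance after `horizon_s` seconds.
    return [o.distance_m + o.speed_mps * horizon_s for o in obstacles]


def plan(predicted_m: List[float], ego_speed_mps: float, horizon_s: float) -> float:
    # Target speed that never exceeds the current speed and, held constant, covers
    # no more than the distance to the nearest predicted obstacle within the horizon.
    if not predicted_m:
        return ego_speed_mps
    nearest = min(predicted_m)
    return max(0.0, min(ego_speed_mps, nearest / horizon_s))


def control(target_mps: float, ego_speed_mps: float, gain: float = 0.5) -> float:
    # Proportional controller: positive output = throttle, negative = brake.
    return gain * (target_mps - ego_speed_mps)


def step(raw_ranges_m: List[float], ego_speed_mps: float, horizon_s: float = 2.0) -> float:
    # One pass through the pipeline: perception -> prediction -> planning -> control.
    obstacles = perceive(raw_ranges_m)
    predicted = predict(obstacles, horizon_s)
    target = plan(predicted, ego_speed_mps, horizon_s)
    return control(target, ego_speed_mps)


print(step([10.0], ego_speed_mps=10.0))  # obstacle 10 m ahead while driving at 10 m/s
```

In a real vehicle, each function would be a separately trained and validated component. That separation is one of the main attractions of a modular design: stages can be tested and replaced independently.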

1. Perception

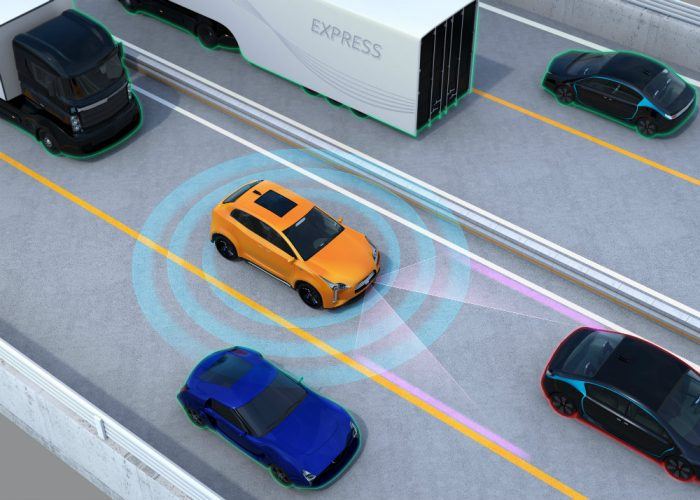

Perception systems allow autonomous vehicles to understand their environment by fusing data from multiple sensors and interpreting it through AI models. This section outlines how sensor fusion, object detection, and scene understanding work together to build a reliable real-time picture.

- Sensor Fusion:

- The system synchronizes data streams from cameras, LiDAR, radar, GPS, and inertial sensors to build a unified, accurate model of the vehicle’s surroundings in real time.

- LiDAR uses laser pulses to generate dense 3D point clouds, capturing fine details like road edges, surface elevations, and nearby objects with high spatial resolution.

- Radar complements this by providing long-range detection and reliable performance in adverse weather—rain, fog, and snow—where optical sensors degrade.

- Inertial Measurement Units (IMUs) track vehicle dynamics by measuring acceleration, angular velocity, and orientation, supporting precise localization and motion estimation, especially when GPS signals are weak or unavailable.

- Object Detection and Classification:

- Convolutional neural networks (CNNs) process camera images and depth data to recognize objects in the surrounding environment: other vehicles, pedestrians, traffic signs, road edges, and lane markings, even in bad weather or under partial occlusion.

- Scene Understanding:

- Machine learning models learn not only to identify objects but also to relate them to each other and to the road structure. For example, they can infer who has the right of way, or whether a vehicle is parked or about to pull out.
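A common building block of sensor fusion is combining two noisy measurements of the same quantity, weighted by how much each sensor can be trusted. The minimal sketch below assumes independent Gaussian noise; under that assumption it is the scalar form of the Kalman filter measurement update. The function name and the sensor variances are illustrative assumptions, not specifications of real LiDAR or radar units.

```python
from typing import Tuple


def fuse(mean_a: float, var_a: float, mean_b: float, var_b: float) -> Tuple[float, float]:
    """Fuse two independent Gaussian estimates of the same quantity.

    Each estimate is weighted by the inverse of its variance, so the more
    precise sensor dominates; the fused variance is never larger than either input.
    """
    if var_a <= 0 or var_b <= 0:
        raise ValueError("variances must be positive")
    w_a = 1.0 / var_a
    w_b = 1.0 / var_b
    fused_var = 1.0 / (w_a + w_b)
    fused_mean = fused_var * (w_a * mean_a + w_b * mean_b)
    return fused_mean, fused_var


# Hypothetical readings: LiDAR says 20.0 m (variance 0.04 m^2),
# radar says 20.6 m (variance 0.36 m^2).
mean, var = fuse(20.0, 0.04, 20.6, 0.36)
print(f"fused distance = {mean:.2f} m, variance = {var:.3f} m^2")
```

The fused variance (0.036 m² here) is smaller than that of the better sensor alone, which is the quantitative payoff of fusion: the combined estimate is more certain than any single input.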

2. Prediction & Planning

Once the environment is perceived, the autonomous system must decide how to act. This involves predicting the behavior of others on the road and planning a safe, legal, and efficient trajectory in real time.

- Path Planning:

- The system calculates a safe and efficient route in real time. It accounts for road geometry, traffic rules, moving objects, and the car’s motion limits.

- Behavior Prediction:

- Neural networks predict the actions of other road users, for instance whether a pedestrian will cross or a car will cut in, typically over a short horizon of 3–5 s.

- Reinforcement Learning:

- RL is used in simulation to train driving behaviors such as merging, overtaking, or yielding through trial and error. The resulting policies are tested offline for safety before being used on real roads.
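The simplest behavior-prediction baseline assumes every road user keeps its current velocity over the prediction horizon. Learned predictors such as the neural networks described above replace this extrapolation with data-driven forecasts, but the output has a similar structure: a sequence of future positions over a few seconds, checked against the ego vehicle's own plan. The sketch below is a hypothetical illustration; the function names and scene values are made up.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def predict_constant_velocity(pos: Point, vel: Point, horizon_s: float, dt: float) -> List[Point]:
    """Extrapolate a road user's position assuming it keeps its current velocity."""
    steps = int(round(horizon_s / dt))
    return [(pos[0] + vel[0] * k * dt, pos[1] + vel[1] * k * dt) for k in range(1, steps + 1)]


def min_separation(traj_a: List[Point], traj_b: List[Point]) -> float:
    """Smallest distance between two trajectories sampled at the same time steps."""
    return min(math.dist(a, b) for a, b in zip(traj_a, traj_b))


# Hypothetical scene: ego car heading east at 10 m/s, pedestrian 30 m ahead
# and 6 m to the side, walking toward the lane at 1.5 m/s.
ego = predict_constant_velocity((0.0, 0.0), (10.0, 0.0), horizon_s=4.0, dt=0.5)
ped = predict_constant_velocity((30.0, 6.0), (0.0, -1.5), horizon_s=4.0, dt=0.5)
gap = min_separation(ego, ped)
print(f"closest predicted approach: {gap:.2f} m")
```

Here the two predicted paths come within 1.5 m of each other at t = 3 s, which a planner would treat as a conflict and resolve by slowing down or yielding.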

3. Control

The control system translates decisions into physical actions. It ensures that the vehicle follows the planned path smoothly and safely, even under changing road conditions.

- Actuator Control:

- Algorithms convert planned paths into low-level commands, such as how much to steer, accelerate, or brake, while compensating for actuation delay, road slope, and traction limits. Advanced control systems combine sensor inputs and prediction data to adjust the vehicle's heading in real time, faster than a human driver could react. They also respond to obstacles and cues such as other cars changing lanes, lane closures, and key navigation markers, rerouting or slowing down as needed.

- End-to-End Learning:

- Some systems bypass the traditional modular pipeline and learn to steer or brake directly from raw sensor data. These end-to-end learning systems are still largely experimental, mainly because they are difficult to certify as safe.
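As one concrete example of turning a planned path into a steering command, the sketch below implements pure pursuit, a classic geometric path-tracking method for a kinematic bicycle model. It is a swapped-in textbook technique, not necessarily what any particular vehicle uses (model predictive control and learned controllers are common alternatives), and the function name, wheelbase, and look-ahead point are illustrative assumptions.

```python
import math


def pure_pursuit_steering(target_x: float, target_y: float, wheelbase_m: float) -> float:
    """Steering angle (rad) that drives a bicycle-model car onto a look-ahead point.

    The target is expressed in the vehicle frame: x forward, y to the left,
    origin at the rear axle. Positive output means steer left.
    """
    lookahead_sq = target_x ** 2 + target_y ** 2
    if lookahead_sq == 0.0:
        raise ValueError("look-ahead point must not coincide with the rear axle")
    # Curvature of the circular arc that starts at the origin, tangent to the
    # heading, and passes through the target point.
    curvature = 2.0 * target_y / lookahead_sq
    return math.atan(wheelbase_m * curvature)


# Hypothetical values: 2.8 m wheelbase, look-ahead point 10 m ahead and 1 m left.
delta = pure_pursuit_steering(10.0, 1.0, wheelbase_m=2.8)
print(f"steering angle: {math.degrees(delta):.2f} deg")
```

The controller re-aims at a new look-ahead point on every cycle; a longer look-ahead distance gives smoother but less precise tracking.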

4. Learning and Improvement

Autonomous cars rely on machine learning to continuously improve their performance across perception, prediction, and control tasks. Learning doesn’t stop at initial deployment — fleets on the road are constantly gathering new data, which is then used to retrain or fine-tune models. To make this process scalable and efficient, different learning strategies are applied depending on the nature and availability of data.

- Supervised Learning:

- Labeled driving data is used to train models to detect objects, follow lanes, or classify road types. Labels are typically produced by human reviewers or automated annotation tools.

- Self-Supervised and Unsupervised Learning:

- These approaches help the system learn patterns from unlabeled driving data, such as scene structure or driver behavior, reducing the need for manual labeling.

- Online Learning:

- Vehicles record difficult or novel situations while driving. These are reviewed and used to improve future model versions, after validation.
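One way a fleet could decide which recorded situations count as difficult is uncertainty sampling: flag frames where the model's class probabilities are spread out rather than confident. The sketch below scores frames by the entropy of their softmax output. It is an illustrative assumption, not a description of any specific company's data pipeline; the function names, frame IDs, probabilities, and threshold are hypothetical.

```python
import math
from typing import Dict, List


def entropy(probs: List[float]) -> float:
    """Shannon entropy (nats) of a class-probability vector; higher = less certain."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def select_for_review(frames: Dict[str, List[float]], threshold: float) -> List[str]:
    """Return IDs of frames whose prediction entropy exceeds `threshold`, most uncertain first."""
    scored = [(entropy(p), frame_id) for frame_id, p in frames.items()]
    flagged = [(h, fid) for h, fid in scored if h > threshold]
    return [fid for h, fid in sorted(flagged, reverse=True)]


# Hypothetical softmax outputs over (car, pedestrian, cyclist) for three frames.
frames = {
    "frame_001": [0.97, 0.02, 0.01],   # confident
    "frame_002": [0.40, 0.35, 0.25],   # very uncertain
    "frame_003": [0.60, 0.35, 0.05],   # somewhat uncertain
}
print(select_for_review(frames, threshold=0.5))
```

Flagged frames would then go to human review and labeling before being used to retrain the model, matching the validation step described above.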

5. Machine Learning Techniques in Use

The main model families in use are:

- CNNs:

- Convolutional Neural Networks (CNNs) are specialized perception-oriented networks used for extracting features from camera and LiDAR data.

- Transformers:

- Transformers are commonly used in predicting the future motion of other vehicles and pedestrians. For a broader perspective on how Transformers and other ML tools impact engineering workflows, see our article on Engineering Applications of Artificial Intelligence.

- Graph Neural Networks:

- GNNs model how road users interact at junctions or in traffic.

- Reinforcement Learning:

- RL optimizes vehicle behavior in complex situations such as roundabouts or narrow roads.
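The core operation inside a Transformer is scaled dot-product attention: each query is compared with every key, the scores are divided by the square root of the vector dimension and passed through a softmax, and the resulting weights average the values. In motion prediction, a query can be thought of as one agent "looking at" the other agents in the scene. The pure-Python sketch below handles a single query with hand-picked 2-D vectors; real models use learned projections, many attention heads, and much larger embeddings, so treat the vectors as hypothetical.

```python
import math
from typing import List

Vector = List[float]


def softmax(xs: Vector) -> Vector:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Scaled dot-product attention for a single query vector."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # Weighted average of the value vectors, one output component at a time.
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]


# Hypothetical 2-D embeddings: the ego-vehicle query attends over three nearby agents.
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[10.0, 0.0], [0.0, 10.0], [-10.0, 0.0]]
print(attention(query, keys, values))
```

The output leans toward the value of the agent whose key best matches the query, while still mixing in information from the others.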

Check how deep learning applications are used in engineering design.

6. Key Challenges

Despite major advances, autonomous driving still faces technical and operational challenges. These issues affect reliability, safety certification, and deployment.

- Uncommon Situations:

- It remains challenging to predict and test for events such as flipped cars, fire trucks blocking lanes, or snow-covered signs.

- Model Verification:

- The safety of driverless cars depends on more than good performance: it also requires traceability, explainability, and formal checks before deployment. For insights into how another safety-critical industry approaches these issues, read more about the Automation in Aerospace Industry.

- Hardware Constraints:

- The entire stack must run in under 100 ms on vehicle-grade chips, so models have to be compressed and optimized without a significant loss of accuracy.
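One widely used compression technique is post-training quantization, which stores weights as 8-bit integers instead of 32-bit floats, cutting weight memory by roughly 4x. The sketch below shows symmetric per-tensor int8 quantization on a handful of hypothetical weights; production toolchains add calibration data, per-channel scales, and integer arithmetic kernels, none of which is modeled here.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float weights."""
    return [v * scale for v in q]


# Hypothetical layer weights.
weights = [0.82, -0.31, 0.05, -1.27, 0.64]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"scale={scale:.4f}", f"max error={max_err:.4f}")
```

With round-to-nearest, each weight's reconstruction error is at most half the scale, which is one reason the accuracy loss can often be kept small.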

Introduction to Autonomous Vehicles

Autonomous vehicles, also known as self-driving cars, have evolved from research prototypes in the 1980s to on-road pilot fleets in major cities by the 2020s.

Landmark projects, such as Carnegie Mellon’s Navlab and DARPA’s Grand Challenges, laid the foundation.

Today, companies such as Waymo, Tesla, Cruise, Zoox, and NVIDIA lead the field, alongside automakers like Mercedes-Benz and Hyundai, which are investing heavily in automation technologies. Some self-driving cars already operate as robotaxis, offering an alternative to human-driven vehicles in ride-hailing services, pilot programs, and limited urban deployments. While still constrained by regulations and geographic scope, these autonomous vehicles are steadily gaining traction as viable mobility solutions.

- Modern autonomous vehicles rely on a fusion of sensors: cameras for visual cues, LiDAR for depth perception, radar for motion detection, and GPS for localization. Machine learning models interpret this raw sensor data to detect objects and plan safe trajectories, much as a human driver does, but with more computational power and without fatigue or distraction. These models are trained on millions of driving scenarios and refined through real-world testing and simulation.

- Self-driving technology integrates several advanced fields: computer vision for scene understanding, neural networks for pattern recognition, and reinforcement learning for decision-making under uncertainty. The systems operate under tight constraints:

- Navigating traffic

- Reacting to unpredictable human behavior

- Adapting to complex environments.

- The benefits of self-driving cars include reducing collisions, easing urban congestion, and enhancing mobility for individuals who are unable to drive.

Examples of Machine Learning Applications in AVs

Machine learning enables self-driving cars to handle complex, uncertain environments by learning patterns from data. Below are key applications where different ML models enhance perception, prediction, and decision-making.

- Lane Detection:

- Convolutional neural networks accurately identify road boundaries, markings, and lane types across a range of lighting and weather conditions.

- Pedestrian Intention Prediction:

- Sequence models estimate if a person is about to cross the road based on pose and motion.

- Traffic Light Recognition:

- Visual models classify signal states at various angles and distances, even when the signal is occluded or misaligned.

- Dynamic Path Planning:

- Algorithms continuously recalculate optimal paths based on the movement of vehicles, temporary blockages, and safety margins.

- Anomaly Detection:

- Outlier events, such as debris or stalled vehicles, are flagged in real time to trigger cautionary maneuvers or fallback behaviors.
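Dynamic path planning can be illustrated with A* search on an occupancy grid: when a new blockage appears, the planner simply reruns on the updated grid. This is a swapped-in classic algorithm chosen for clarity; production planners typically search over continuous, dynamically feasible trajectories. The function name, grid size, and obstacle position below are hypothetical.

```python
import heapq
from typing import Dict, List, Optional, Set, Tuple

Cell = Tuple[int, int]


def astar(start: Cell, goal: Cell, blocked: Set[Cell], width: int, height: int) -> Optional[List[Cell]]:
    """Shortest 4-connected path on a grid, using Manhattan distance as the heuristic."""
    def h(c: Cell) -> int:
        return abs(c[0] - goal[0]) + abs(c[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries: (f = g + h, g, cell)
    came_from: Dict[Cell, Cell] = {}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:  # walk parent links back to the start
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > g_cost.get(cell, float("inf")):
            continue  # stale heap entry; a cheaper route was already found
        x, y = cell
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if not (0 <= nxt[0] < width and 0 <= nxt[1] < height) or nxt in blocked:
                continue
            new_g = g + 1
            if new_g < g_cost.get(nxt, float("inf")):
                g_cost[nxt] = new_g
                came_from[nxt] = cell
                heapq.heappush(open_heap, (new_g + h(nxt), new_g, nxt))
    return None  # goal unreachable


# Hypothetical 5 x 3 grid: x = distance along the road, y = lane index.
route = astar((0, 1), (4, 1), blocked=set(), width=5, height=3)
# A stalled vehicle appears at (2, 1); the planner simply replans around it.
detour = astar((0, 1), (4, 1), blocked={(2, 1)}, width=5, height=3)
print(route)
print(detour)
```

With the stalled vehicle at (2, 1), the planner returns a seven-cell route that swings into an adjacent lane and back, two cells longer than the original.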

Machine Learning Fundamentals

Machine Learning (ML) is a method that enables computers to learn patterns from data, rather than being explicitly programmed with step-by-step instructions. A model is trained by showing it many examples (called training data) along with the correct outputs.

By minimizing the difference between predicted and actual outputs, the model adjusts its internal parameters to improve accuracy.

Once trained, the model can generalize to new, unseen input data.
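The training loop described above can be shown with the smallest possible model: a straight line fitted by gradient descent on the mean squared error. Neural networks use the same principle, just with millions of parameters and gradients computed by backpropagation. The function name and data below are hypothetical; the data is a toy calibration curve that follows y = 2x + 1 exactly.

```python
from typing import List, Tuple


def fit_line(xs: List[float], ys: List[float], lr: float = 0.05, epochs: int = 2000) -> Tuple[float, float]:
    """Fit y ~ w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Step against the gradient to reduce the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical training data: raw sensor reading (x) -> calibrated value (y).
readings = [0.0, 1.0, 2.0, 3.0]
targets = [1.0, 3.0, 5.0, 7.0]
w, b = fit_line(readings, targets)
print(f"w = {w:.3f}, b = {b:.3f}")
```

After training, w and b converge to approximately 2 and 1, and the fitted line can then produce outputs for readings it never saw during training.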

There are several types of machine learning. Most applications in self-driving cars rely on supervised learning for perception and behavior prediction, while reinforcement learning plays a growing role in decision-making under uncertainty.

Let’s break down how these ML principles are applied in the development of self-driving vehicles:

- Autonomous vehicles rely on machine learning not just to automate driving tasks, but to learn how to drive by extracting patterns from large-scale data. Instead of programming each rule explicitly, engineers train models that generalize from recorded driving behavior, sensor inputs, and simulated cases.

- Visual perception is facilitated through deep learning, particularly convolutional neural networks, which analyze sensor images to identify road elements such as pedestrians, vehicles, traffic lights, or lanes. These models are trained to function across weather conditions, lighting changes, and partial occlusions.

- To drive safely, the vehicle also needs to anticipate how others will move. Prediction models (often based on attention mechanisms) forecast short-term trajectories of surrounding agents. These forecasts inform planning modules, which generate feasible paths that adhere to traffic rules, road geometry, and the car’s dynamic limits. The selected trajectory is then executed by control systems that translate planned motion into precise steering, acceleration, and braking commands.

- Learning doesn’t stop after deployment. Fleets continuously collect data from unusual scenarios. These are reviewed and used to retrain models, helping vehicles improve over time without relying solely on fixed software updates. A constant learning loop is what keeps the system aligned with the real-world complexity of driving.

Explore the usage of AI in automotive product development.

Machine Learning Algorithms

Neural networks represent a shift from rule-based programming to data-driven learning.

Instead of hardcoding behavior, these AI models are trained to extract meaning from raw sensor data, including camera images, radar returns, LiDAR point clouds, and GPS readings, and convert it into decisions.

This allows vehicles to adapt to complex, changing environments without needing explicit instructions for every situation.

Neural networks are utilized in self-driving cars to analyze data from various sensors and make informed driving decisions, enabling the vehicle to perceive its environment effectively.

Several types of machine learning are used. Let’s look at how each is applied within self-driving systems:

- Supervised learning is used to teach the system how to detect objects and understand signs and lanes by learning from labeled examples.

- Unsupervised learning helps uncover patterns in unlabeled data, such as clustering similar traffic scenes or identifying unusual behaviors.

- Reinforcement learning is used in simulation to develop strategies for complex maneuvers, with the system receiving feedback (rewards) based on the safety and smoothness of its actions.
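The reward-driven trial and error behind reinforcement learning can be shown with tabular Q-learning on a deliberately tiny, hypothetical environment: a car on a five-position road segment chooses to wait or advance, earns a reward for reaching the goal, and pays a small penalty for every other step. Real AV training uses high-fidelity simulators and neural-network function approximation instead of a lookup table, so treat this only as an illustration of the update rule; all names and reward values are assumptions.

```python
import random
from typing import List, Tuple

N_STATES = 5          # positions 0..4 along a toy road segment; 4 is the goal
ACTIONS = (0, 1)      # 0 = wait in place, 1 = advance one position


def env_step(state: int, action: int) -> Tuple[int, float, bool]:
    """Deterministic toy dynamics: returns (next_state, reward, done)."""
    next_state = min(state + action, N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 10.0, True    # reached the goal
    return next_state, -1.0, False       # time penalty for every other step


def train_q(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
            epsilon: float = 0.2, seed: int = 0) -> List[List[float]]:
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy exploration: usually exploit, sometimes try a random action.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[state][a])
            nxt, reward, done = env_step(state, action)
            # Q-learning target: reward plus discounted best value of the next state.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
    return q


q_table = train_q()
policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print(policy)  # greedy action for each non-goal position
```

With the seed fixed, training is reproducible, and the learned greedy policy advances from every position, because waiting only accumulates time penalties.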

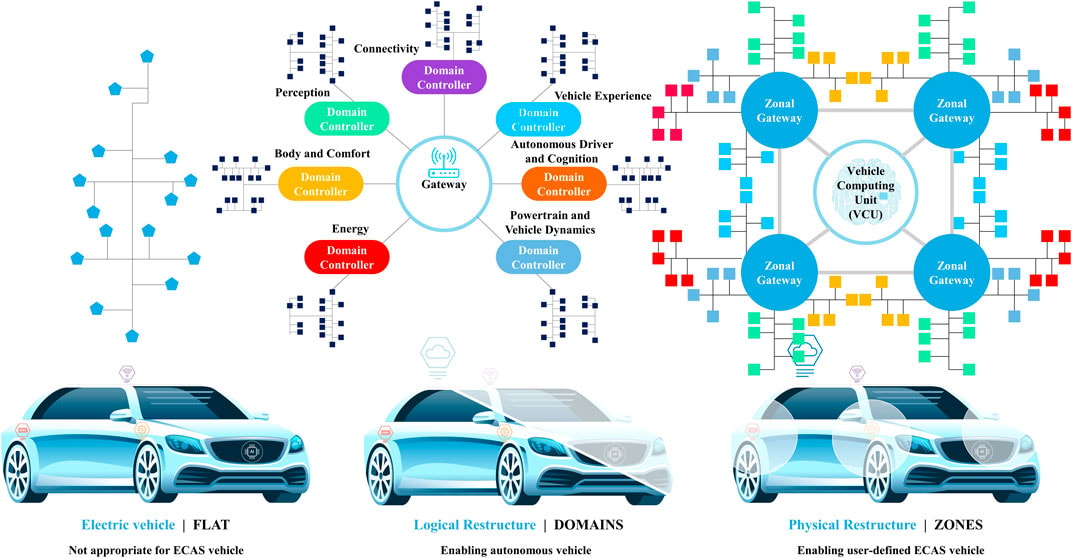

Current Trends in Autonomous System Development

The development of autonomous vehicles is accelerating, backed by focused R&D and system-level integration. Automotive companies are building next-generation platforms that combine reliable hardware with scalable AI models. Explore how AI is used in the automotive industry.

Machine learning plays a central role in how a car perceives its surroundings, including other vehicles, and improves the accuracy of object classification. Deep learning networks trained on driving data help the vehicle classify objects, track their movement, and respond to unpredictable changes on the road, and these models keep improving as they are retrained on new data.

Sensors such as LiDAR and radar are taking center stage in perception stacks. Because they keep working in poor visibility, they are essential for maintaining situational awareness, ensuring safety, and accurately capturing a changing environment.

Efforts in the development of self-driving cars are now shaped by practical demands: reducing delays in traffic flow, preventing collisions, and improving control precision under realistic constraints. Engineers focus on fast perception-to-action loops and safe fallback strategies.

These trends are not just technical upgrades. They mark a shift toward autonomous systems that perform reliably, operate independently, and meet functional safety requirements without human intervention.

What are the Real-World Applications and Testing Environments for Self-Driving Cars?

Self-driving car development requires extensive testing across both simulated and real environments. The following sections describe where and how self-driving car systems are trained, validated, and deployed, from controlled tracks and digital twins to public road trials and niche commercial deployments.

Closed-Circuit and Controlled Testing Grounds

Before autonomous vehicles reach public roads, they undergo thousands of hours of testing in controlled environments.

Facilities like Mcity (University of Michigan) and GoMentum Station (California) are purpose-built test tracks designed to replicate realistic driving scenarios, complete with intersections, crosswalks, lights, highway segments, and even faux construction zones.

These grounds enable OEMs and tech companies to safely test edge cases, i.e., scenarios that are rare but critical for safety, such as pedestrians suddenly jaywalking, aggressive merging, or a cyclist changing lanes.

Engineers can repeat these tests precisely and modify variables like weather, lighting, or timing, which is impossible on public roads.

This approach accelerates debugging and helps validate system behavior under stress, reducing risk before vehicles ever reach public roads.
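Repeatable testing with controlled variables amounts to sweeping a test matrix. The sketch below enumerates every combination of a few scenario parameters so that each case can be rerun identically; the `Scenario` fields, the `scenario_matrix` function, and all values are hypothetical and do not reflect the format of any specific facility's tools.

```python
import itertools
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Scenario:
    weather: str
    lighting: str
    pedestrian_delay_s: float  # when the pedestrian steps out, relative to a trigger


def scenario_matrix(weathers: List[str], lightings: List[str], delays_s: List[float]) -> List[Scenario]:
    """Every combination of the test variables (a full-factorial test plan)."""
    return [Scenario(w, l, d) for w, l, d in itertools.product(weathers, lightings, delays_s)]


# Hypothetical test plan for a jaywalking-pedestrian edge case.
cases = scenario_matrix(["dry", "rain", "fog"], ["day", "dusk", "night"], [0.5, 1.0, 1.5])
print(len(cases), cases[0])
```

Three values for each of three variables already yield 27 cases, which is why exhaustive sweeps grow quickly and are often complemented by simulation.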

Complementing physical testing, Hardware-in-the-Loop (HIL) setups are used to validate individual components, such as braking controllers or radar units, by simulating real sensor inputs and vehicle responses. This allows engineers to evaluate how hardware and software interact before ever installing them in a complete car, reducing risk and catching integration issues early in development.

Controlled testing grounds and HIL systems together form the backbone of safe, staged deployment for autonomous driving technologies.

Public Road Testing and Regulatory Frameworks

Testing self-driving cars on public roads is the most visible (and the most scrutinized) phase in autonomous vehicle development.

Companies like Waymo and Cruise operate fleets in complex urban environments, such as San Francisco and Phoenix, gathering critical data on real-world traffic, pedestrians, cyclists, and unpredictable weather conditions.

These trials are structured experiments under close supervision.

Human-in-the-Loop

In most jurisdictions, public testing requires either a safety driver behind the wheel or remote oversight systems capable of intervening in the event of a failure.

This human-in-the-loop approach is not just a precaution; it’s often a legal requirement that balances innovation with public safety.

Global and Local Realities

But the pace of deployment varies widely.

- While US states like California and Arizona have created relatively straightforward paths for testing and rollout, other regions are more cautious or fragmented.

- In the EU, regulatory approval is slower and more centralized.

- In China, rules are evolving but are heavily influenced by national industrial policy.

This patchwork of laws and risk tolerance defines not only where companies test, but also how quickly they can transition from supervised trials to commercial autonomy.

Simulation Platforms and Digital Twins

Testing autonomous vehicles in the real world is costly, slow, and limited by safety constraints.

- Simulation platforms like CARLA, LGSVL, and NVIDIA DRIVE Sim provide realistic virtual environments where self-driving systems can be tested across millions of diverse scenarios, including scenarios that pose safety risks such as low visibility, sensor malfunctions, jaywalking pedestrians, and multi-agent interactions.

- These platforms support full-stack validation, from raw sensor emulation to decision-making and control. High-fidelity simulations replicate vehicle dynamics, weather effects, lighting conditions, and complex traffic behavior. Digital twins of real cities allow developers to reproduce edge cases and fine-tune system responses.

- Simulation also generates synthetic data used to train machine learning models, particularly for perception tasks. Synthetic data helps cover rare or hazardous situations (such as emergency vehicles in reverse or flooding) more efficiently and at a lower cost.

- Used in continuous integration pipelines, these tools accelerate development, improve safety validation, and reduce dependency on manually labeled real-world driving footage.

Niche Deployments in Semi-Structured Environments

Full autonomy on public roads remains a complex and challenging task.

In the meantime, semi-structured environments with limited traffic, low speeds, and more predictable conditions have become proving grounds for commercial autonomous vehicles.

These niche deployments are strategic footholds, enabling robust validation, public acceptance, and incremental scaling toward broader AV integration:

- In logistics hubs and industrial zones, companies like Einride and Nuro are operating driverless electric vehicles tailored for short-range freight movement. Einride’s autonomous electric trucks (AETs) navigate fenced-off areas, warehouse roads, and designated delivery lanes with remote supervision. Their low-speed operation simplifies both perception and decision-making, enabling reliable deployment without the need for full general autonomy.

- Airports are a high-potential domain. Autonomous shuttles have been projected to operate at many international hubs by 2025, carrying passengers between terminals and remote parking. These shuttles follow fixed routes, avoid public traffic, and run on predictable schedules, making them ideal for early AV deployment. Companies like Navya and Beep provide L4 autonomous systems, often integrated with airport control for centralized monitoring.

- University campuses and retirement communities remain ideal for these initiatives due to their controlled environments, low-speed zones, repeatable patterns, and known users. Autonomous pods serve as last-mile shuttles, reducing car use and improving accessibility. Engineers can refine localization, V2X, and human-machine interaction, unaffected by urban chaos.

What are the Challenges for Self-Driving Cars?

On one hand, self-driving cars promise efficiency and safety.

On the other hand, they face hurdles in privacy, infrastructure, regulation, and technology before reaching widespread adoption:

- Traffic reduction benefits

- Self‑driving cars have the potential to reduce traffic congestion and improve fuel efficiency, a core motivation driving their development.

- Personal data privacy concerns

- There are growing concerns about personal data privacy in autonomous vehicles due to their extensive data collection practices.

- Growing volume of connected cars

- An estimated 367 million connected cars are projected to be on the road globally by 2027, primarily in Europe, the USA, and China, heightening both infrastructure demands and cybersecurity risks.

- An exploding market for car data

- The vehicle-generated data market is projected to be worth between $450 billion and $750 billion by 2030.

- Limited mapping and coverage

- Current mapping for self-driving cars is limited to specific test areas, making broader application challenging and underscoring the need for dynamic, realistic mapping.

Safety and Ethical Considerations

As autonomous vehicles (AVs) become more integrated into public roads, a range of safety, ethical, and legal challenges must be addressed to ensure their responsible deployment and widespread acceptance.

- Public trust in the safety of automated vehicles is crucial for their widespread commercial adoption.

- Without public confidence, even the most advanced AV systems will struggle to gain market acceptance and regulatory approval.

- Incident reports involving autonomous vehicles, such as crashes, further erode public trust despite the potential for fewer accidents with automation.

- While AVs have the potential to reduce human error and traffic fatalities, each high-profile incident magnifies concerns and stalls adoption.

- Car manufacturers, OEMs, and suppliers bear the biggest responsibility for the safe operation of self-driving vehicles.

- As developers and integrators of the underlying technologies, these actors are at the forefront of ensuring that safety is not compromised during the design, production, or deployment phases.

- Legal and liability issues must be resolved regarding accountability in accidents involving autonomous vehicles.

- Determining who is at fault, whether it is software developers, car owners, or third-party vendors, remains a complex and unresolved legal challenge.

- The complex interactions between autonomous vehicles and human drivers present ethical dilemmas that must be considered in their deployment.

- From decision-making in unavoidable crash scenarios to prioritizing pedestrian safety, AVs must be programmed to navigate morally ambiguous situations in mixed-traffic environments.

- Cybersecurity and data privacy concerns are significant issues associated with the interconnected nature of autonomous vehicles.

- The constant exchange of data among AVs, infrastructure, and cloud systems creates vulnerabilities that bad actors could exploit.

- Developing systems that support cybersecurity for AVs is crucial to protect against malicious attacks that could compromise safety.

- Proactive investment in secure architectures and threat detection mechanisms is crucial for defending AVs against tampering, hacking, and surveillance risks.

What are the Future Trends of Self-Driving Cars?

The development of autonomous vehicles (AVs) is progressing steadily, with models improving as they learn from more driving scenarios. Still, several technological, legal, and social factors will influence how and when these cars become mainstream.

- Most vehicles with automated driving features currently on the market offer Level 2 or Level 3 automation.

- These systems can assist with steering, acceleration, and braking, but still require a human driver to supervise or to be ready to take over.

- Driver assistance systems currently available in some cars range from Level 2 to Level 3 automation.

- This incremental rollout is preparing drivers and regulators for the eventual transition to higher levels of autonomy.

- Higher levels of automation (Levels 4 and 5) are expected to reach the broader market in the coming years.

- These systems would enable cars to operate without human intervention in many or all scenarios, reshaping how people travel and commute.

- Current mapping for self-driving cars is limited to specific test areas, making the broader application of this technology challenging.

- To achieve true scalability, AVs will need access to real-time, dynamic mapping across diverse geographic regions and traffic conditions.

- Governments will need to pass laws on vehicle autonomy and driverlessness to support AV technology.

- A regulatory framework is essential for standardizing safety expectations, assigning legal responsibility, and enabling deployment at scale.

- There are growing concerns about personal data privacy in autonomous vehicles, particularly regarding the collection of data.

- AVs rely on continuous data streams for navigation, safety, and performance monitoring, raising serious questions about user consent and data use.

- Autonomous vehicles still struggle with the complex social interactions involved in navigating traffic.

- For example, recognizing gestures, maintaining eye contact, or negotiating informal right-of-way with pedestrians and drivers remains a challenge.

- The adoption of autonomous vehicles may improve transportation options for marginalized communities.

- By offering mobility to people who are elderly, disabled, or underserved by public transport, AVs could help close gaps in accessibility.

- The COVID-19 pandemic negatively impacted the sales of connected cars, including autonomous vehicles, worldwide. Connected car sales in China declined 71% at the start of the COVID-19 pandemic.

- While the pandemic temporarily disrupted growth, it also emphasized the need for touchless, automated mobility solutions that AVs could provide in the long run.

Conclusion

In this article, we explored how machine learning enables self-driving cars to perceive, predict, and act without human input. We covered the core components: perception through sensor fusion and object recognition, prediction of other users’ behavior, planning safe trajectories, and translating them into control commands.

Various learning methods support continuous system improvement. We detailed key technologies, such as CNNs, as well as challenges, including rare edge cases, model verification, and hardware constraints.

Real-world applications span controlled test tracks, public road trials with safety oversight, and simulation environments using digital twins.

Finally, we examined niche deployments in airports, logistics hubs, and campuses, where limited complexity allows for reliable commercial use.

Throughout, we highlighted how automotive companies integrate these technologies to build autonomous platforms focused on awareness, adaptability, and ensuring safety.

FAQs

What machine learning technique is most used in autonomous vehicles for object detection?

Convolutional Neural Networks (CNNs) are the most widely used technique for object detection in autonomous vehicles. They analyze camera and LiDAR inputs to detect objects such as other cars, pedestrians, traffic lights, and lane markings with high spatial accuracy. CNN-based detectors can be optimized for low latency and for robustness under varying lighting and weather conditions.

What ML techniques are most critical for self-driving car perception?

CNNs are essential for visual data recognition tasks, such as lane and sign detection. Transformers and 3D vision models improve temporal consistency and depth reasoning. Scene understanding often uses spatial reasoning models and 3D semantic segmentation. Together, these systems turn raw sensor data into a coherent, real-time model of the environment.

How are real-time decisions achieved in AVs?

Real-time decisions are made by combining learned perception models with deterministic planning and control algorithms. The perception stack updates the scene ~10–30 times per second. Then, trajectory planners select safe paths based on predictions. Control systems translate these into steering, throttle, and brake commands, all under strict timing constraints.
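The timing constraint can be checked with simple arithmetic: at an update rate of f Hz, each cycle has 1000/f ms available. The sketch below assumes the stages run back to back and uses made-up per-stage latencies and a hypothetical `fits_cycle` helper; real stacks pipeline stages in parallel, so this is a simplification.

```python
from typing import Dict


def fits_cycle(stage_latency_ms: Dict[str, float], rate_hz: float) -> bool:
    """True if the stages, run back to back, finish within one update period."""
    period_ms = 1000.0 / rate_hz
    return sum(stage_latency_ms.values()) <= period_ms


# Hypothetical per-stage latencies on an in-vehicle computer.
stages = {"perception": 25.0, "prediction": 8.0, "planning": 10.0, "control": 2.0}
for rate in (10.0, 20.0, 30.0):
    print(f"{rate:.0f} Hz -> period {1000.0 / rate:.1f} ms, fits: {fits_cycle(stages, rate)}")
```

With a 45 ms total, this hypothetical stack fits a 10 Hz or 20 Hz cycle but not a 30 Hz cycle (about 33 ms).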

How can autonomous vehicles help reduce traffic congestion in smart cities?

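Autonomous cars can react faster than human drivers and use vehicle-to-vehicle (V2V) and vehicle-to-infrastructure (V2I) communication to improve decision-making. They can smooth traffic flow by reducing unnecessary braking and avoiding erratic maneuvers, while shared fleets can be rebalanced dynamically to match demand without adding to parking pressure.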

What challenges do AVs face in ensuring road safety?

Key challenges include handling rare and unpredictable scenarios (e.g., emergency cars, unusual pedestrian behavior), sensor failures, poor visibility, and adversarial conditions that ML algorithms must address. Ensuring reliability across diverse environments requires extensive simulation, realistic testing, perception redundancy, and formal safety validation of both software and hardware systems.

Will self-driving cars require a driver’s license or manual override in the future?

That depends on the jurisdiction and the level of autonomy. As of 2025, Level 4 vehicles still include manual overrides for human intervention in most regions. The complete removal of the steering wheel and driver responsibility (Level 5) will likely require new regulatory frameworks. It may be limited to specific areas, such as robotaxi zones or delivery fleets.