ML vs LLM: Key Differences, Applications & Engineering Impact

Language models like GPT are built on decades of progress in machine learning, specifically in a subfield called deep learning. But what exactly makes these systems different from earlier ML models? And why does it matter?

This article walks through the basics: how Machine Learning works, what Deep Learning adds, and how Large Language Models (LLMs) fit into the picture. Along the way, we’ll see how LLMs differ from traditional models in size, architecture, training, and capabilities.

Introduction. What is Machine Learning?

Machine Learning (ML) is a field of computer science and statistics focused on improving task performance through experience (data) instead of explicit programming. ML algorithms run on a wide range of hardware, from laptops to GPUs and TPUs. ML includes various approaches, from basic statistical methods to complex neural networks. ML systems generally:

- Process training data to find patterns

- Create models that represent these patterns

- Use models for predictions or decisions on new data.

Core Paradigms of Machine Learning Models

Supervised learning is a category of machine learning where the algorithm is trained on a dataset that includes inputs and their corresponding known outputs. Each training example is a pair: an input vector and its associated label or target value. The system learns to map inputs to outputs by minimizing the error between its predictions and the true labels. This approach is used to solve two main problems: classification and regression. In classification tasks, the goal is to assign inputs to one of several predefined categories, for example deciding whether a financial transaction is legitimate or fraudulent, or detecting objects in an image. In regression tasks, the objective is to estimate continuous values, such as forecasting electricity demand, predicting stock prices, or assessing the remaining useful life of an engine. Because regression models return continuous outputs, Machine Learning (and Deep Learning) can also support advanced applications such as accelerating engineering simulation.
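As a minimal sketch of the regression case, the snippet below fits a straight line to a handful of labeled pairs by ordinary least squares. The data and the function names `fit_line` and `predict` are invented for illustration; real projects would normally rely on an established library.

```python
# Toy supervised regression: fit y ≈ w*x + b by ordinary least squares.
# The training pairs are invented for illustration only.

def fit_line(xs, ys):
    """Return (w, b) minimizing the mean squared error on the pairs (x, y)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    w = cov_xy / var_x
    b = mean_y - w * mean_x
    return w, b

def predict(w, b, x):
    """Apply the learned mapping to a new, unseen input."""
    return w * x + b

# Labeled examples: each input comes with its known target value.
train_x = [1.0, 2.0, 3.0, 4.0]
train_y = [3.0, 5.0, 7.0, 9.0]   # generated from y = 2x + 1

w, b = fit_line(train_x, train_y)
print(w, b, predict(w, b, 5.0))  # prints: 2.0 1.0 11.0
```

The model never sees the rule y = 2x + 1; it recovers the slope and intercept purely from the labeled pairs, which is the essence of supervised learning.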

Unsupervised learning works without answer keys. It is like exploring a city without a map and discovering its layout as you go. The algorithm seeks natural relationships in unlabeled data. Recent advances have been impressive: self-supervised techniques let the algorithm teach itself by comparing different views of the same data. These systems can perform nearly as well as supervised ones, often with only about 1% of the labeled examples previously required.
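To make "seeking natural relationships in unlabeled data" concrete, here is a small sketch of k-means clustering on one-dimensional points. It illustrates classic clustering rather than the self-supervised techniques mentioned above; the data and the starting centroids are assumptions chosen for the example.

```python
# Toy k-means on 1-D data: no labels are given, only raw measurements.

def kmeans_1d(points, centroids, iterations=10):
    """Alternate between assigning points to the nearest centroid
    and moving each centroid to the mean of its assigned points."""
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Keep the old centroid if a cluster ends up empty.
        centroids = [
            sum(c) / len(c) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids, clusters

# Invented measurements that visibly form two groups.
points = [1.0, 1.2, 0.8, 5.0, 5.2, 4.8]
centroids, clusters = kmeans_1d(points, centroids=[0.0, 6.0])
print(centroids)
print(clusters)
```

The algorithm ends with one centroid near 1 and one near 5, although nobody told it that two groups exist or where they are.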

Reinforcement learning takes a different approach. Instead of learning from examples, it learns through trial and error with feedback. Think of training a pet. If you reward good behaviors, the pet will learn what actions lead to treats. Reinforcement learning algorithms develop strategies to maximize rewards over time. While we've seen impressive results in games like chess and Go, the real challenges come in messy real-world situations: continuous movements (like robot arms), incomplete information, and situations where feedback is rare or delayed.
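The reward-driven loop described above can be sketched with a two-action "bandit" problem and an epsilon-greedy strategy. This is one of the simplest reinforcement learning setups, not a full algorithm for games or robotics; the reward probabilities, the step count, and the function name `run_bandit` are assumptions made for the example.

```python
import random

def run_bandit(reward_probs, steps=2000, epsilon=0.1, seed=0):
    """Learn by trial and error which action pays off most often."""
    rng = random.Random(seed)
    counts = [0] * len(reward_probs)     # how often each action was tried
    values = [0.0] * len(reward_probs)   # running average reward per action
    total_reward = 0
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(len(reward_probs))                   # explore
        else:
            action = max(range(len(values)), key=lambda a: values[a])   # exploit
        reward = 1 if rng.random() < reward_probs[action] else 0        # the "treat"
        counts[action] += 1
        values[action] += (reward - values[action]) / counts[action]    # update average
        total_reward += reward
    return values, counts, total_reward

# Hypothetical environment: action 1 is rewarded far more often than action 0.
values, counts, total_reward = run_bandit([0.2, 0.8])
print(values, counts, total_reward)
```

The agent is never told which action is better. It only observes rewards, and over time it shifts most of its choices toward the action with the higher estimated value.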

Algorithmic Foundations of Machine Learning Models

Machine Learning encompasses many algorithms and models, including traditional models like Naive Bayes and complex ones like neural network-based models. The shift from classical statistics to modern ML shows the evolution of modeling and computational power.

Linear models are typically trained by solving convex optimization problems, so the optimizer is guaranteed to reach a global optimum. Tree-based methods instead partition the feature space using criteria such as information gain, capturing non-linear decision boundaries without any gradient computation.

Support Vector Machines (SVMs) work by finding the boundary that separates the data classes with the largest possible margin.
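As a worked example of the information gain criterion used by tree-based methods, the sketch below computes the entropy of a set of labels and the gain obtained by splitting it. The labels and the two candidate splits are invented for illustration.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((c / total) * math.log2(c / total) for c in Counter(labels).values())

def information_gain(parent, left, right):
    """Entropy reduction achieved by splitting `parent` into `left` and `right`."""
    n = len(parent)
    weighted = (len(left) / n) * entropy(left) + (len(right) / n) * entropy(right)
    return entropy(parent) - weighted

parent = ["fraud"] * 4 + ["ok"] * 4

# A split that separates the classes perfectly removes all uncertainty.
perfect = information_gain(parent, ["fraud"] * 4, ["ok"] * 4)

# A weaker split leaves both sides mixed (3 vs 1 on each side).
weak = information_gain(
    parent,
    ["fraud", "fraud", "fraud", "ok"],
    ["fraud", "ok", "ok", "ok"],
)

print(perfect)          # prints: 1.0
print(round(weak, 2))   # about 0.19
```

A decision tree evaluates many candidate splits like these and keeps the one with the highest gain, which is why no gradients are needed.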

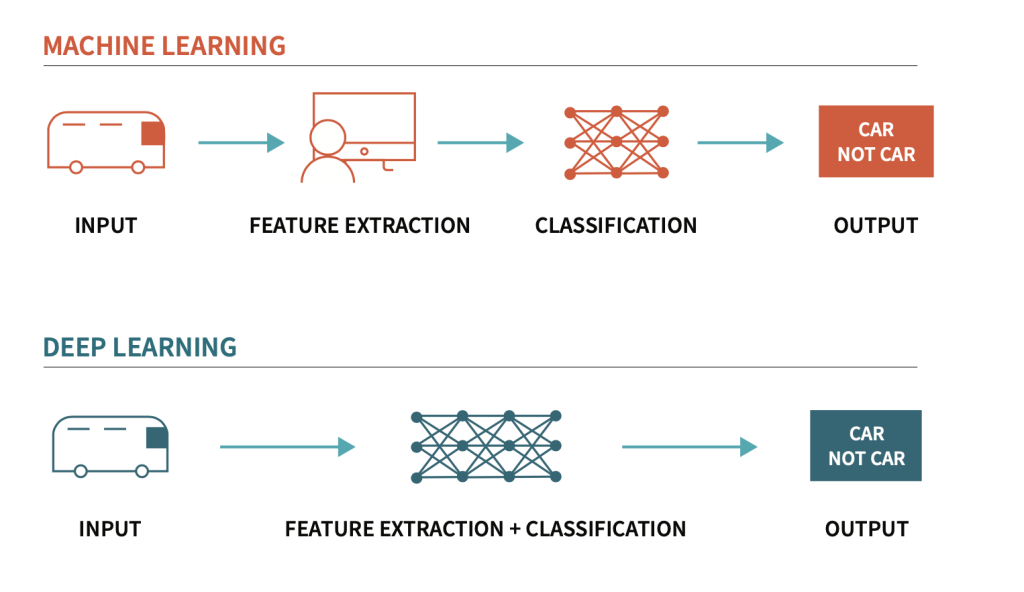

Deep Learning is based on deep neural networks that leverage hierarchical representations, with each layer transforming its inputs into increasingly abstract features. Their function approximation properties enable the modeling of complex relationships. Specialized neural architectures, such as 3D Convolutional Neural Networks (CNNs) and attention mechanisms (which dynamically weight their inputs), efficiently process textual data or images. These techniques improve computer vision and natural language processing, where machine performance now matches human abilities in specific areas.

What are Large Language Models?

LLMs can generate human-like text, conduct conversations, and summarize content. In exchange, they require vast and diverse datasets, huge compute resources, and careful fine-tuning to align outputs with intent.

Large Language Models (LLMs) are a subset of Machine Learning (ML) and, more specifically, of Deep Learning: they are deep learning models built to understand and generate human language. They excel in complex linguistic tasks like translation, question answering, and summarization.

LLMs represent a significant advancement in natural language processing. While traditional Natural Language Processing relied on hand-crafted features and task-specific architectures, LLMs employ self-supervised learning on vast text corpora to develop general-purpose language understanding capabilities.

LLMs use transformer architectures with self-attention mechanisms to track word relationships in long sequences, capturing context and meaning. In deep learning, attention can be thought of as a vector of importance weights. To predict an element, such as a pixel in an image or a word in a sentence, the model uses the attention vector to estimate how strongly that element is related to the other elements. The sum of their values, weighted by the attention vector, serves as the approximation of the target.
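The weighted-sum idea can be sketched for a single query using scaled dot-product attention, the formulation commonly used in transformers. The toy vectors below are invented, and a real model would learn the query, key, and value projections rather than receive them by hand.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)                              # subtract max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    d = len(query)
    # Similarity of the query with each key, scaled by sqrt(dimension).
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)                    # the "attention vector"
    # Weighted sum of the value vectors, one output component at a time.
    output = [
        sum(w * v[i] for w, v in zip(weights, values))
        for i in range(len(values[0]))
    ]
    return weights, output

# Invented 2-D example: the query points in the same direction as the first key.
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[10.0, 0.0], [0.0, 10.0], [-10.0, 0.0]]

weights, output = attention(query, keys, values)
print(weights)
print(output)
```

The first key matches the query best, so it receives the largest weight and its value dominates the output.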

An LLM transforms input tokens:

x₁, x₂, …, xₜ₋₁

into a probability distribution over the next token xₜ:

P(xₜ | x₁, x₂, …, xₜ₋₁).

For example, if the input is “The pressure drops when the valve…”, the model breaks this into tokens like [“The”, “pressure”, “drops”, “when”, “the”, “valve”, …] and calculates the probabilities for the next token: P(“opens”) = 0.78, P(“fails”) = 0.15, and P(“heats”) = 0.07.
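Taking the three probabilities from the example above as given, the following sketch shows two common ways of turning such a distribution into an actual next token: picking the most likely one, or sampling in proportion to the probabilities. The function names and the random seed are assumptions made for illustration.

```python
import random

# Distribution over the next token, copied from the example in the text.
next_token_probs = {"opens": 0.78, "fails": 0.15, "heats": 0.07}

def greedy(probs):
    """Always choose the single most probable token."""
    return max(probs, key=probs.get)

def sample(probs, rng):
    """Draw one token at random, in proportion to its probability."""
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]

print(greedy(next_token_probs))  # prints: opens

rng = random.Random(42)
draws = [sample(next_token_probs, rng) for _ in range(1000)]
print({token: draws.count(token) for token in next_token_probs})
```

With sampling, "opens" still appears most often, but "fails" and "heats" show up occasionally, which is one reason the same prompt can produce different completions.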

The LLM estimates these probabilities through statistical language modeling: it’s not reasoning about pressure or valves.

In reality, whether the pressure drops or rises when a valve opens depends on the pressure difference across it. But the model completes the sentence with what’s most statistically common in its training data.

That’s how LLMs generate text: by predicting one token at a time based on likelihood, not physical laws.
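A drastically simplified stand-in makes the point tangible. The bigram model below only counts which word follows which in a tiny invented corpus, whereas a real LLM conditions on the whole context with a neural network, yet the principle of completing text with the statistically most common continuation is the same.

```python
from collections import Counter, defaultdict

# Tiny invented "training corpus".
corpus = [
    "the pressure drops when the valve opens",
    "the flow rises when the valve opens",
    "the alarm sounds when the valve fails",
]

def train_bigrams(sentences):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, prev):
    """Relative frequencies of the words seen after `prev`."""
    total = sum(counts[prev].values())
    return {word: c / total for word, c in counts[prev].items()}

def generate(counts, start, max_words=5):
    """Extend the text one word at a time with the most frequent follower."""
    words = [start]
    while len(words) < max_words and counts[words[-1]]:
        words.append(counts[words[-1]].most_common(1)[0][0])
    return words

counts = train_bigrams(corpus)
print(next_word_probs(counts, "valve"))
print(generate(counts, "valve"))  # prints: ['valve', 'opens']
```

The model answers "opens" after "valve" only because that pairing is more frequent in its corpus, not because it knows anything about valves.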

Hallucination and Confabulation in LLMs

LLM hallucinations occur when models generate false claims (e.g., stating "One pound of air weighs more than one pound of water") due to:

- Data gaps: Training on incomplete/biased corpora

- Overfitting: Prioritizing linguistic patterns over factual accuracy

- Prompt ambiguity: Unclear inputs triggering "best guess" outputs

Confabulations occur when LLMs generate plausible-sounding but factually incorrect responses. These errors can be subtle and misleading, especially in contexts where accuracy is critical. For example, imagine asking an LLM, “What is the capital of Australia?” The model might correctly answer “Canberra” in one instance but later confabulate by responding “Sydney”, a common misconception. Both answers sound plausible, but only one is accurate. This inconsistency highlights how LLMs reconstruct information based on statistical patterns rather than grounded knowledge.
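One simple way to surface this kind of inconsistency is to ask the same question several times and measure how often the answers agree. The sketch below assumes the answers have already been collected; the list of responses is invented, and the function name `agreement` is hypothetical.

```python
from collections import Counter

def agreement(answers):
    """Return the most common answer and the share of responses that match it."""
    most_common_answer, count = Counter(answers).most_common(1)[0]
    return most_common_answer, count / len(answers)

# Invented responses to "What is the capital of Australia?" across five runs.
answers = ["Canberra", "Canberra", "Sydney", "Canberra", "Canberra"]

top_answer, share = agreement(answers)
print(top_answer, share)  # prints: Canberra 0.8
```

Low agreement is a useful warning sign, but high agreement does not prove correctness: a model can repeat the same wrong answer consistently.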

Paradoxically, hallucinated outputs show higher narrativity than factual ones, mirroring human storytelling patterns. This suggests potential applications in creative writing, provided users recognize the outputs as fictional.

While LLMs represent a leap in NLP, the following remain largely unresolved:

- No physical experience to anchor common-sense reasoning

- Accuracy depends heavily on the training data scope

- Difficulty tracking long dialogue threads

Machine Learning vs LLM - Key Differences

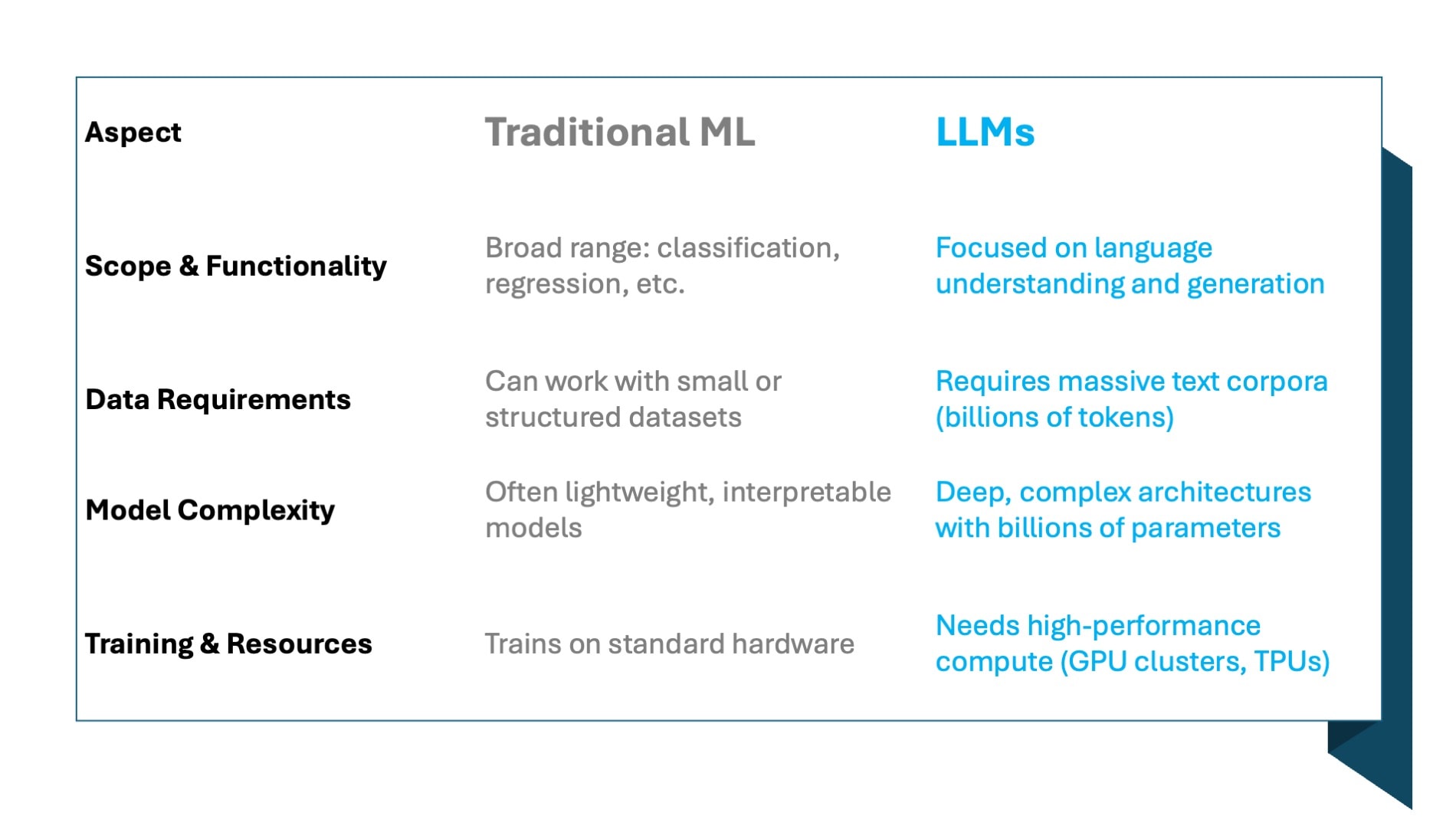

Machine Learning (ML) is a broad field used for classification, regression, clustering, and forecasting across many domains. LLMs are a specialized branch focused on understanding and generating human language.

Data Requirements, Model Complexity, Training, and Resources

Traditional ML can work with structured data or small datasets. On the other hand, LLMs require enormous volumes of text, billions or trillions of tokens, to learn linguistic patterns effectively.

Traditional ML models have long relied on feature extraction for applications across various industries. With LLMs, the burden of complex feature extraction largely disappears, but it is replaced by higher financial costs for data and compute.

LLMs use deep transformer architectures with billions of parameters. In contrast, many ML models, like decision trees or logistic regression, are more straightforward to interpret.
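A back-of-the-envelope calculation shows where the "billions" come from. The sketch below uses a common approximation of roughly 12 × d_model² weights per transformer layer and ignores biases and normalization layers; the configuration (4096-wide, 32 layers, 32,000-token vocabulary) is a hypothetical example, not the specification of any particular model.

```python
def logistic_regression_params(n_features):
    """One weight per input feature plus a single bias term."""
    return n_features + 1

def transformer_params(d_model, n_layers, vocab_size):
    """Rough weight count for a decoder-style transformer (biases ignored)."""
    attention = 4 * d_model * d_model      # query, key, value and output projections
    feed_forward = 8 * d_model * d_model   # two matrices with a 4*d_model hidden layer
    embeddings = vocab_size * d_model      # token embedding table
    return n_layers * (attention + feed_forward) + embeddings

small = logistic_regression_params(n_features=20)
large = transformer_params(d_model=4096, n_layers=32, vocab_size=32000)

print(small)           # prints: 21
print(f"{large:,}")    # prints: 6,573,522,944
```

A model with 21 parameters can be inspected weight by weight. One with several billion cannot, which is a large part of the interpretability gap.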

Training an LLM demands massive compute resources (GPUs, TPUs, distributed systems). Traditional ML models can often be trained on standard hardware with less time and cost.

The above considerations are summarized in the table.

Applications of Machine Learning and LLMs

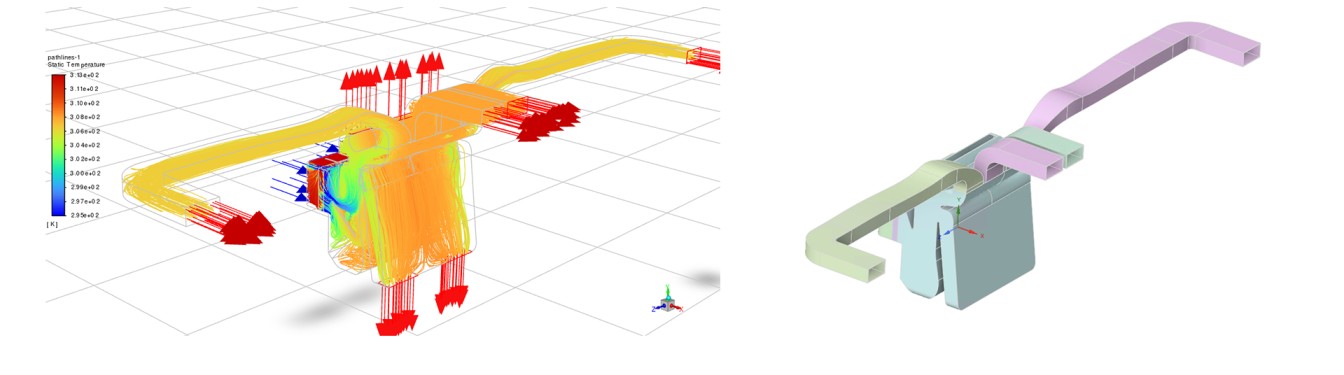

Machine Learning (ML) enables computers to learn from data patterns without explicit programming. By creating predictive models based on past results, ML reduces computation time in fields like engineering simulation, including computational fluid dynamics (CFD). It’s particularly beneficial when physical models are too costly or complex to solve directly. ML is versatile and applicable to classification, regression, and optimization, which explains the growing use of Machine Learning in engineering. However, ML often requires manual feature engineering: selecting and transforming the correct input variables to get good results. This step can be time-consuming and may limit scalability.
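The idea of predicting from past results instead of re-running a solver can be sketched with a tiny surrogate model. Here a k-nearest-neighbors regressor learns from a few stored runs of `expensive_simulation`, a hypothetical stand-in whose formula is invented for the example; production surrogates typically use far richer models and data.

```python
def expensive_simulation(inlet_velocity):
    """Stand-in for a costly solver run; the formula is invented for illustration."""
    return 0.5 * 1.2 * inlet_velocity ** 2   # a dynamic-pressure-like quantity

def knn_predict(train, x, k=2):
    """Average the outputs of the k stored runs whose input is closest to x."""
    nearest = sorted(train, key=lambda pair: abs(pair[0] - x))[:k]
    return sum(y for _, y in nearest) / k

# "Past results": a handful of simulations run once and stored.
train = [(v, expensive_simulation(v)) for v in [0.0, 2.0, 4.0, 6.0, 8.0, 10.0]]

# New design point: answered from stored data, without calling the solver.
estimate = knn_predict(train, 5.0)
reference = expensive_simulation(5.0)   # computed here only to check the estimate
print(estimate, reference)
```

The surrogate returns about 15.6 against a reference of 15.0, a 4% error obtained at negligible cost. The trade-off between speed and accuracy is what makes such models attractive when each real simulation is expensive.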

In finance, ML models flag suspicious transactions in real time by recognizing subtle deviations from normal behavior. In e-commerce and entertainment, recommendation engines learn user preferences to serve relevant products or content, improving engagement and conversion.
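A deliberately crude sketch of deviation-based flagging: compare each new transaction with the account's historical mean and standard deviation, and flag anything more than a few standard deviations away. The amounts and the threshold are invented, and real fraud systems use many more features and far more capable models.

```python
import statistics

def flag_anomalies(history, new_amounts, threshold=3.0):
    """Return the new amounts whose z-score against the history exceeds the threshold."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    return [amt for amt in new_amounts if abs(amt - mean) / stdev > threshold]

# Invented spending history for one account, and three incoming transactions.
history = [20, 25, 22, 19, 24, 21, 23, 26]
incoming = [24, 500, 18]

flagged = flag_anomalies(history, incoming)
print(flagged)  # prints: [500]
```

Only the 500 transaction stands far enough from normal behavior to be flagged; the other two fall within the usual range for this account.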

In healthcare, ML supports diagnosis by analyzing medical scans or lab data with a precision that complements clinical expertise. Logistics and supply chain operations use ML to anticipate demand fluctuations, detect bottlenecks, and improve inventory decisions.

ML also powers autonomous systems, enabling vehicles, drones, and robots to interpret sensor data, adapt to dynamic environments, and operate safely with minimal human input, supported by Machine Learning optimization of project parameters.

Applied LLMs and the Turing Test

Large Language Models (LLMs) have changed how we interact with technology. They produce outputs that often mirror human language with remarkable fidelity. LLMs are trained on vast amounts of text data from the internet, enabling them to understand and generate human-like text. This advancement has led to significant discussions about their capabilities, especially in the context of the Turing Test, a benchmark for machine intelligence.

LLMs like OpenAI’s GPT-4.5 can generate text that is not only coherent but also contextually relevant. For instance, GPT-4.5 is employed to draft articles, compose poetry, and engage in customer service interactions. Large Language Models can automate content creation processes, saving time and resources for businesses. Users have reported that the responses are often indistinguishable from those written by humans, noting the model’s adeptness at understanding nuanced prompts and delivering appropriate replies.

The speed at which AI models respond, known as latency, is also critical in user-facing applications.
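Latency is straightforward to measure in practice. The sketch below times repeated calls to `fake_model`, a hypothetical stand-in for a real model call, and reports the median along with a simple index-based approximation of the 95th percentile; the function names and the number of runs are assumptions.

```python
import statistics
import time

def fake_model(prompt):
    """Hypothetical stand-in for a real model call."""
    time.sleep(0.001)          # pretend the model needs some time to respond
    return prompt.upper()

def measure_latency(fn, prompt, runs=20):
    """Time `runs` calls to `fn` and summarize the results in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn(prompt)
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    return {
        "median_ms": statistics.median(samples),
        "p95_ms": samples[int(0.95 * (runs - 1))],   # rough 95th percentile
        "max_ms": samples[-1],
    }

stats = measure_latency(fake_model, "What is the capital of Australia?")
print(stats)
```

Reporting a high percentile alongside the median matters for user-facing systems, because occasional slow responses are what users tend to notice.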

LLMs are preferred for tasks requiring nuanced language understanding, like chatbots and text summarization.

However, this humanlike performance has also raised concerns.

Some users have expressed unease about the potential for LLMs to produce misleading information or to be used in creating deceptive content. The balance between utility and ethical considerations remains a critical area of ongoing discussion.

The Turing Test and LLM Consciousness

The Turing Test examines whether a machine can demonstrate human-like intelligence. A human evaluator converses through text with both a person and a machine, without knowing which is which. If the evaluator can't reliably distinguish the machine from the human based on the conversation, the machine passes the test. The key is not giving correct answers but responding convincingly, with language, reasoning, and conversational patterns that emulate a person. The test therefore measures how well a machine imitates intelligent behavior rather than proving understanding or consciousness.

In recent evaluations, GPT-4.5 was judged to be human 73% of the time, which means evaluators picked it as the human more often than they picked the actual human participants. This has been reported as the first empirical evidence of an AI system passing a traditional three-party Turing Test.

These results have sparked debates about the implications of machines achieving such levels of human-like interaction. While some view it as a testament to technological progress, others caution against overestimating the depth of understanding these models possess, emphasizing that mimicking language does not equate to genuine comprehension or consciousness.

LLM advancements have transformed various sectors, offering tools that can generate human-like text with unprecedented accuracy. While these developments present exciting opportunities, they also necessitate careful consideration of ethical implications, particularly concerning the potential for misuse and the importance of maintaining transparency in AI-human interactions.

Integration in Engineering Workflows

ML, especially Deep Learning, can accelerate simulations, optimize designs, and predict physical behaviors from data. Thanks to a 3D Deep Learning approach, tools like Neural Concept apply this concept to 3D product geometries.

Meanwhile, LLMs can assist in automating documentation, interpreting results, generating code, and supporting decision-making through natural language interfaces.

Combining both offers an intriguing synergy: ML handles numerical tasks and design predictions, while LLMs enhance usability, explanation, and interaction. For example, engineers can query simulation results using natural language or receive design suggestions supported by fast ML-driven models. This duo reduces manual effort, speeds up iterations, and makes complex tools more accessible.

Future Trends and Conclusion

Future Trends - Generative AI

Generative AI refers to a broader category of AI systems capable of creating content such as text, images, and videos. It relies heavily on deep learning techniques, particularly Deep Neural Networks, which automate and enhance feature extraction from unstructured data. With AI data collection and generation, generative AI can help businesses automate content creation and achieve scalability without compromising quality.

Generative AI can be backed by "serious" engineering predictions, such as those from applying Machine Learning in CFD, as shown by the Neural Concept use cases.

Large Language Models (LLMs), which specialize in language tasks, are a key part of this generative trend. LLMs and generative AI share a foundation in neural networks, and their applications are expanding rapidly, from creative tools and chatbots or virtual assistants to code generation and simulation. For example, companies employ LLMs to develop intelligent chatbots that enhance customer service by providing quick and accurate responses.

We’re seeing a shift in the roles of ML and LLMs. Traditional Machine Learning models are often more efficient for structured data analysis and continue to excel at structured prediction and decision-making tasks, while LLMs and generative models will take on more complex, creative, and human-facing functions.

Conclusion

In conclusion, while LLMs are a subset of machine learning, they mark a distinct leap in scope, scale, and application.

Traditional ML includes a range of techniques, from supervised and unsupervised learning to reinforcement learning, optimized for structured data, interpretability, and domain-specific tasks such as classification or forecasting.

LLMs rely on massive datasets, deep transformer architectures, and enormous computational resources, for both training and deployment, to model human language in a flexible, general-purpose way. They excel in tasks like translation, summarization, and dialogue, but their outputs are based on statistical likelihoods rather than factual grounding. This can result in convincing but inaccurate responses, especially when prompts are ambiguous or the relevant data is missing.

For engineers and technical users, ML remains a powerful tool for numerical modeling and decision-making. Traditional Machine Learning models are preferred for tasks requiring interpretability and computational efficiency.

At the same time, LLMs are best viewed as advanced linguistic interfaces: useful for drafting, querying, or exploring ideas, but not infallible sources of truth.

As these technologies continue to grow explosively, understanding their differences will help you choose the right tool for the job and manage risks responsibly.

FAQ

How does domain expertise help in a machine learning pipeline?

It guides feature engineering and model design, improving accuracy, especially in structured data problems like binary classification tasks.

What makes deep learning solutions suitable for multiple tasks?

Their feature extraction capabilities allow them to handle structured data, text data, and even generative AI use cases from a single pre-trained model.

How is nuanced language understanding used in sentiment analysis models?

It helps capture nuanced linguistic patterns, which is crucial when analyzing text data from diverse datasets in natural language processing tasks.

Why are technical and financial considerations important in executing financial trading algorithms?

Because both the training data and model choice, like using deep learning for structured data, affect performance and risk in real-time scenarios.

What powers modern virtual assistants?

Models pre-trained on diverse datasets, with strong language understanding that lets them handle tasks ranging from answering queries to supporting emergency response systems.