What Is Cloud Scalability? Benefits & Engineering Applications

Cloud environments deliver flexible, on-demand computing resources to meet changing demand. The principle is to avoid upfront investment in computing power, storage, and services. But cloud computing services are no longer just hosting solutions: they are becoming a strategic platform for dynamic, data-intensive workloads.

Cloud scalability refers to the ability of a cloud environment to handle growing workloads by adjusting its resources.

A scalable cloud environment can be expanded or contracted as demand changes, which helps organizations avoid over-provisioning. Security measures that protect cloud data and applications from threats need to scale along with it.

In a cloud computing system, this is typically done through vertical scaling (adding more processing power to existing machines) or horizontal scaling (adding more machines to the system). Cloud scalability enables both startups and enterprises to optimize performance and cost, and vertical and horizontal scaling are key strategies in building a robust, scalable cloud environment.

This article will explore cloud scalability, how a scalable cloud environment works, and why it matters for engineering applications. We will break down the concepts of vertical and horizontal scaling in cloud computing, look at how organizations achieve effective resource management and cloud scalability in practice, and highlight the key benefits of building a scalable cloud environment for modern workloads.

What is Cloud Computing Scalability?

Cloud computing scalability refers to the ability of a cloud environment to dynamically allocate or release resources based on demand. This can be done through vertical scaling (scaling up/down within a single node) or horizontal scaling (scaling out/in by adding/removing nodes).

Scalability in cloud computing ensures performance, availability, and cost-efficiency under changing workloads.

Industries today face volatile and growing demands (from traffic spikes in e-commerce to real-time processing in IoT). Achieving cloud scalability allows systems to remain responsive without overprovisioning.

This flexibility has at least three advantages:

- Reduces operational overhead

- Improves resource utilization

- Supports continuous delivery and disaster recovery

As cloud systems become ever more complex, scalability in cloud computing becomes non-negotiable for business continuity and customer satisfaction, and for preventing performance degradation.

Scalability in cloud computing enables:

- On-demand provisioning for training large models

- Parallel execution of engineering simulations

- Cost-effective experimentation without upfront infrastructure

AI and engineering workloads like deep learning, digital twin simulations, and high-performance computing (HPC) often require sudden bursts of compute or memory. Cloud scalability makes it practical to run GPU-intensive tasks, manage large datasets, and test AI models at scale, especially when combining horizontal scaling in cloud computing for distributed training and vertical scaling for memory-heavy inference.

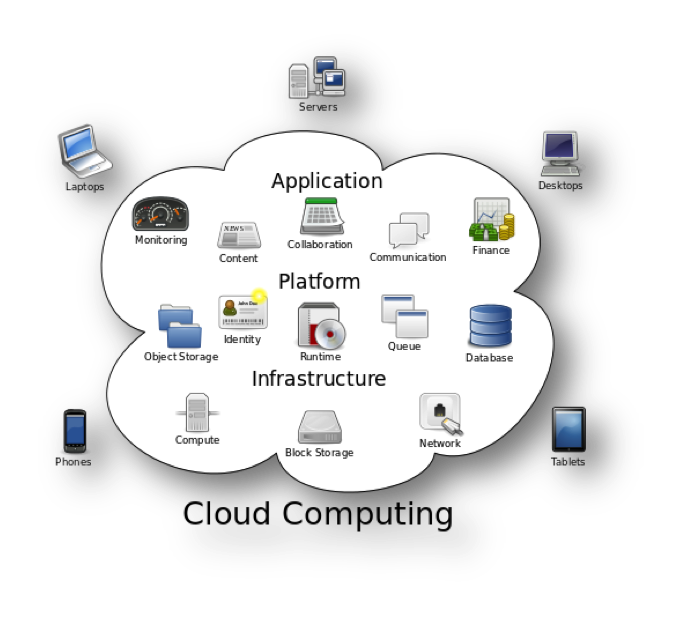

What Are the Main Cloud Computing Resources?

Cloud computing relies on three core resources: compute, storage, and networking.

Each of them plays a role.

- Compute. This is the processing power of virtual machines (VMs), containers, or serverless functions. Compute resources run applications, simulations, and services. They are measured in vCPUs, GPU cores, and memory (RAM), and can scale automatically based on workload.

- Storage. This includes data storage options ranging from block storage for high-performance databases to object storage for backups. Cloud storage is elastic, redundant, accessible from anywhere, and often tiered by speed and cost.

- Networking. This connects all components both internally and externally and includes virtual private networks (VPNs), load balancers, firewalls, and bandwidth provisioning. Reliable networking keeps data flowing between services and users with low latency.

Key Principles of Cloud Computing Scalability

Scalability is a fundamental property of any robust cloud computing environment. It allows organizations to respond to fluctuating demand without downtime or waste, ultimately reducing cloud costs. The following principles highlight how cloud infrastructure supports seamless and intelligent scaling.

On-Demand Resource Allocation

Cloud infrastructure allocates compute, storage, and networking resources in real time based on current demand. This dynamic provisioning avoids overprovisioning and minimizes idle capacity. Autoscaling policies automatically monitor workloads and spin up or decommission resources, maintaining performance without manual intervention.
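As a rough illustration of such an autoscaling policy, the sketch below applies a simple threshold rule to average CPU utilization. The class name, thresholds, and instance limits are hypothetical values chosen for the example; real cloud providers expose their own policy formats and usually add cooldown periods.

```python
from dataclasses import dataclass


@dataclass
class ThresholdPolicy:
    """Hypothetical autoscaling policy; every value here is illustrative."""
    scale_out_above: float = 0.75  # average CPU utilization that adds an instance
    scale_in_below: float = 0.30   # average CPU utilization that removes an instance
    min_instances: int = 1
    max_instances: int = 10


def next_instance_count(current: int, avg_cpu: float, policy: ThresholdPolicy) -> int:
    """Return the instance count to use for the next monitoring interval."""
    if avg_cpu > policy.scale_out_above:
        target = current + 1   # spin up one more instance
    elif avg_cpu < policy.scale_in_below:
        target = current - 1   # decommission one instance
    else:
        target = current       # utilization is in the comfortable band
    # Never go below the minimum or above the maximum fleet size.
    return max(policy.min_instances, min(policy.max_instances, target))


if __name__ == "__main__":
    policy = ThresholdPolicy()
    count = 2
    for cpu in [0.82, 0.91, 0.60, 0.22, 0.18]:
        count = next_instance_count(count, cpu, policy)
        print(f"avg CPU {cpu:.0%}: run {count} instance(s)")
```

Changing one instance at a time keeps the sketch easy to follow; a production policy would typically also wait between scaling actions to avoid oscillation.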

Cloud Elasticity vs. Scalability

Cloud elasticity refers to short-term, real-time expansion and contraction of resources, while cloud scalability is the system’s ability to grow sustainably over time. Both rely on a flexible cloud infrastructure, but scalability often includes planning for architectural evolution and performance under prolonged load.

The Role of AI in Intelligent Scaling

AI and machine learning models analyze usage patterns in a cloud computing environment to predict demand spikes and optimize resource distribution. These AI-driven scaling mechanisms reduce latency, lower costs, and prevent bottlenecks by fine-tuning allocation of resources in the cloud infrastructure.

Types of Cloud Scalability & How They Work

Cloud scalability strategies can be tailored to different workloads, enabling organizations to maintain optimal performance across diverse computing needs. The three main types are Vertical Scalability (Scaling Up), Horizontal Scalability (Scaling Out), and Diagonal Scalability (Hybrid Scaling).

Vertical Scalability (Scaling Up)

Vertical scaling involves enhancing the capabilities of an existing server by adding more resources like CPU processing power, memory, or storage capacity. It is often the fastest way to improve performance without altering system architecture. This approach is ideal when applications are not designed for distribution or need low-latency access to shared memory.

The best use cases are high-performance engineering simulations, where large models benefit from powerful single-node execution.

Horizontal Scalability (Scaling Out)

Horizontal scalability distributes workloads across multiple servers or virtual machines. Instead of upgrading a single system, it replicates services across nodes that work in parallel. This is especially effective for stateless applications and parallelizable tasks.

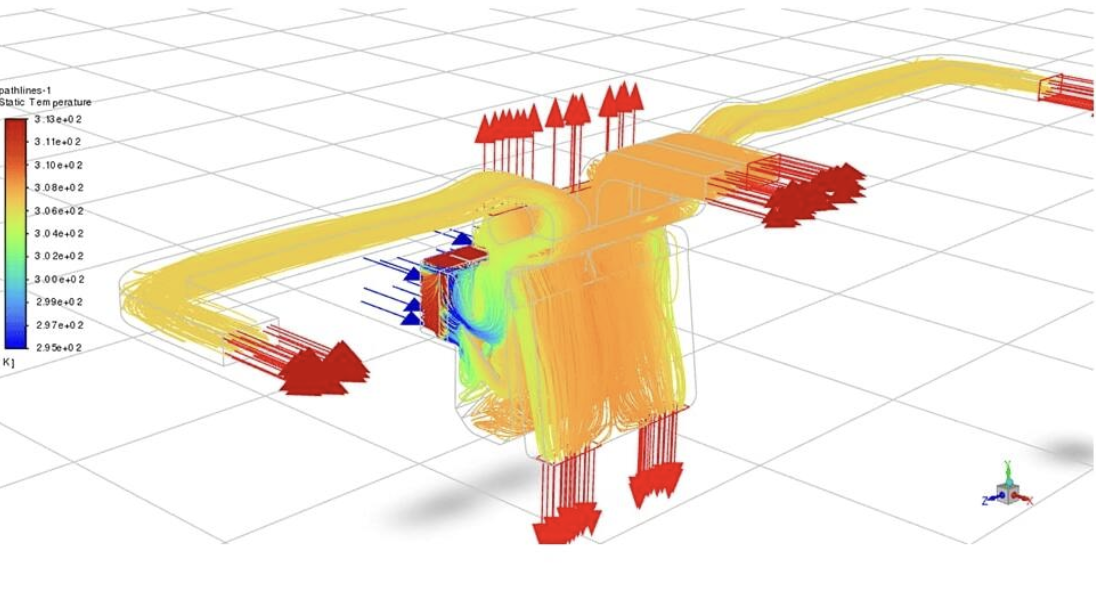

The best use cases are large-scale AI model training and CFD/FEA cloud-based simulations. It helps achieve scalability by reducing the load per server while increasing throughput.
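To make the idea of parallelizable, stateless work concrete, here is a minimal sketch in which independent jobs are spread over a pool of workers. Threads inside one process stand in for separate nodes, and `simulate_case` is a hypothetical placeholder for a real simulation or training shard, so this illustrates the pattern rather than an actual multi-node setup.

```python
from concurrent.futures import ThreadPoolExecutor


def simulate_case(case_id: int) -> int:
    """Hypothetical stand-in for one independent job, e.g. a single simulation run."""
    return case_id * case_id


def run_scaled_out(case_ids, workers: int):
    """Distribute independent jobs over `workers` parallel workers.

    Each job needs no shared state, so adding workers reduces the load
    per worker without changing the results.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as the inputs.
        return list(pool.map(simulate_case, case_ids))


if __name__ == "__main__":
    print(run_scaled_out(range(8), workers=4))
```

Because the jobs do not depend on each other, the same call works unchanged whether `workers` is 1 or 16, which is the property that makes a workload a good fit for scaling out.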

Diagonal Scalability (Hybrid Scaling)

Diagonal scaling blends vertical and horizontal methods. It starts by scaling up an existing server until it reaches capacity and then scales out to multiple servers. This hybrid strategy, combining horizontal and vertical scaling, allows for stepwise growth while preserving performance and cost-efficiency.
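The "scale up first, then scale out" sequence can be sketched as follows. The list of instance sizes and the `Cluster` type are assumptions made for the example, and the cluster is assumed to consist of identical nodes.

```python
from dataclasses import dataclass

# Hypothetical instance sizes in vCPUs, from smallest to largest.
SIZES = [2, 4, 8, 16]


@dataclass(frozen=True)
class Cluster:
    node_vcpus: int  # size of each node (must be one of SIZES)
    nodes: int       # number of identical nodes

    @property
    def capacity(self) -> int:
        return self.node_vcpus * self.nodes


def scale_diagonally(cluster: Cluster, required_vcpus: int) -> Cluster:
    """Grow the cluster until it can serve `required_vcpus`.

    Nodes are first upgraded to the next larger size (vertical step);
    once the largest size is reached, nodes are added (horizontal step).
    """
    while cluster.capacity < required_vcpus:
        idx = SIZES.index(cluster.node_vcpus)
        if idx < len(SIZES) - 1:
            cluster = Cluster(SIZES[idx + 1], cluster.nodes)          # scale up
        else:
            cluster = Cluster(cluster.node_vcpus, cluster.nodes + 1)  # scale out
    return cluster


if __name__ == "__main__":
    start = Cluster(node_vcpus=2, nodes=1)
    for need in (3, 12, 40):
        print(need, "vCPUs needed:", scale_diagonally(start, need))
```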

The best use cases are AI-driven enterprise workflows that require burst compute with distributed training or inference. It ensures adaptability and optimal performance under dynamic demands.

Advantages & Benefits of Cloud Scalability

Cloud scalability benefits organizations operating in dynamic and compute-heavy environments. It supports real-time resource optimization while maintaining service continuity and performance.

Cost-Efficiency of Scalable Solutions

Scalable cloud environments reduce the risk of over-provisioning. You pay only for the compute, storage, and network resources you actually use. This dynamic allocation lowers the total cost of ownership and avoids idle infrastructure, contributing to significant cost savings.
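A small worked example shows where the savings come from. All figures below (the price and the hourly demand profile) are hypothetical and chosen only to keep the arithmetic easy to follow; prices are kept in integer cents to avoid rounding noise.

```python
# All numbers are hypothetical, for illustration only.
PRICE_CENTS_PER_INSTANCE_HOUR = 10

# Instances needed in each hour of one day (24 entries).
HOURLY_DEMAND = [2] * 8 + [6] * 4 + [10] * 2 + [6] * 4 + [2] * 6


def fixed_cost_cents(demand, price_cents):
    """Cost of provisioning for the peak hour and keeping it running all day."""
    return max(demand) * len(demand) * price_cents


def on_demand_cost_cents(demand, price_cents):
    """Cost of paying only for the instances needed in each hour."""
    return sum(demand) * price_cents


if __name__ == "__main__":
    fixed = fixed_cost_cents(HOURLY_DEMAND, PRICE_CENTS_PER_INSTANCE_HOUR)
    scaled = on_demand_cost_cents(HOURLY_DEMAND, PRICE_CENTS_PER_INSTANCE_HOUR)
    print(f"peak-provisioned: {fixed} cents, scaled to demand: {scaled} cents")
```

In this made-up profile, peak provisioning pays for 240 instance-hours while demand-based scaling pays for 96, so the gap is entirely idle capacity.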

Improved Performance

Resources can be adjusted in real time to meet changing workload demands. This minimizes bottlenecks, ensuring that compute-intensive tasks such as simulations or data processing run at peak performance.

Reliability & Disaster Recovery

Cloud-scalable architectures include automated failover and geographic redundancy. Workloads are redistributed to functioning instances if a node or region fails, minimizing downtime and data loss.
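The redistribution step can be pictured with a short sketch. The node and workload names are hypothetical, and the "least-loaded node" rule is just one simple placement strategy chosen for the example; real schedulers weigh many more factors.

```python
def redistribute(assignments: dict, failed: str) -> dict:
    """Move workloads from a failed node onto the remaining healthy nodes.

    `assignments` maps a node name to its list of workloads. Each orphaned
    workload goes to whichever healthy node currently holds the fewest.
    """
    healthy = {node: list(work) for node, work in assignments.items() if node != failed}
    if not healthy:
        raise RuntimeError("no healthy nodes left to take over the workloads")
    for workload in assignments.get(failed, []):
        target = min(healthy, key=lambda node: len(healthy[node]))
        healthy[target].append(workload)
    return healthy


if __name__ == "__main__":
    before = {"node-a": ["w1", "w2"], "node-b": ["w3"], "node-c": ["w4", "w5"]}
    print(redistribute(before, failed="node-a"))
```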

Flexibility for AI-Driven Engineering

AI workloads in applications such as AI-driven predictive maintenance can shift unpredictably. Cloud scalability allows teams to instantly provision GPU instances or distributed training environments, enabling rapid experimentation without infrastructure reconfiguration.

How Cloud Scalability Powers AI & Engineering Workflows

Scalability is the foundation of modern AI and engineering innovation in the cloud. As workloads grow in size and complexity, scalable infrastructure allows systems to expand or shrink automatically. This ensures optimal performance, cost-efficiency, and availability, which are critical for compute-intensive tasks like training machine learning models, including Machine Learning in CFD to accelerate design.

Below are key ways cloud scalability enhances these workflows.

Cloud-Based AI Model Training

Before going into more detail on the cloud infrastructure, it is essential to understand what "training" an AI model means. A machine learning algorithm learns patterns from data to make predictions or decisions without being explicitly programmed. During training, the model:

- Processes input data through neural networks

- Makes predictions based on its current parameters

- Calculates error by comparing AI predictions to known correct answers

- Updates its parameters to minimize this error through optimization algorithms, like gradient descent

- Repeats this process thousands to millions of times over the entire dataset
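The steps above can be sketched with a deliberately tiny example. To keep it self-contained, a two-parameter linear model stands in for a neural network, and the dataset is a handful of made-up points on the line y = 2x + 1; the learning rate and epoch count are likewise illustrative choices.

```python
# Toy dataset generated from y = 2x + 1 (illustrative values, not real data).
XS = [0.0, 1.0, 2.0, 3.0, 4.0]
YS = [1.0, 3.0, 5.0, 7.0, 9.0]


def train(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):                            # repeat over the entire dataset
        preds = [w * x + b for x in xs]                # predict with current parameters
        errors = [p - y for p, y in zip(preds, ys)]    # compare with known answers
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        w -= learning_rate * grad_w                    # update parameters to reduce error
        b -= learning_rate * grad_b
    return w, b


if __name__ == "__main__":
    w, b = train(XS, YS)
    print(f"learned w={w:.3f}, b={b:.3f}")
```

A real model has millions or billions of parameters instead of two, which is why the same loop becomes a hardware problem at scale.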

This computationally intensive process requires processing vast datasets while performing a very large number of mathematical operations, making computing power a bottleneck. A scalable cloud computing environment allows teams to spin up multiple GPU instances across different servers, then shut them down when training is complete.

This on-demand flexibility speeds up iteration, avoids bottlenecks, and reduces idle costs. Cloud scalability also ensures that models can be retrained frequently using growing datasets without re-architecting infrastructure. Organizations can dynamically scale resources horizontally (adding more machines) and vertically (using more powerful machines) based on specific training needs.

The pay-as-you-go model transforms large capital expenditures into operational expenses:

- A training run that might take weeks on standard physical hardware can be completed in hours or days using 8-32 high-end GPUs

- Spot/preemptible instances offer discounts for interruptible workloads with proper checkpointing

- Resource monitoring and auto-scaling prevent wasted compute during experimentation phases
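Checkpointing is what makes interruptible instances usable for long jobs. The sketch below is a minimal illustration, not a real training script: the `run` function, its `interrupt_at` parameter (used to simulate a preemption), and the JSON checkpoint format are all assumptions made for the example.

```python
import json
import os
import tempfile


def save_checkpoint(path, state):
    """Write the checkpoint atomically so a preemption mid-write cannot corrupt it."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)  # atomic rename over the previous checkpoint


def load_checkpoint(path):
    """Return the saved state, or a fresh state if no checkpoint exists yet."""
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"step": 0, "total": 0}


def run(path, total_steps, interrupt_at=None):
    """Hypothetical job that resumes from its last checkpoint.

    `interrupt_at` simulates the instance being reclaimed at that step.
    """
    state = load_checkpoint(path)
    while state["step"] < total_steps:
        if interrupt_at is not None and state["step"] == interrupt_at:
            return state                      # simulated preemption
        state["total"] += state["step"]       # stand-in for one training step
        state["step"] += 1
        save_checkpoint(path, state)
    return state


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workdir:
        ckpt = os.path.join(workdir, "checkpoint.json")
        print("interrupted at:", run(ckpt, 10, interrupt_at=4))
        print("after resume:  ", run(ckpt, 10))
```

Saving after every step is convenient for a toy example; real jobs usually checkpoint every few minutes to balance lost work against I/O cost.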

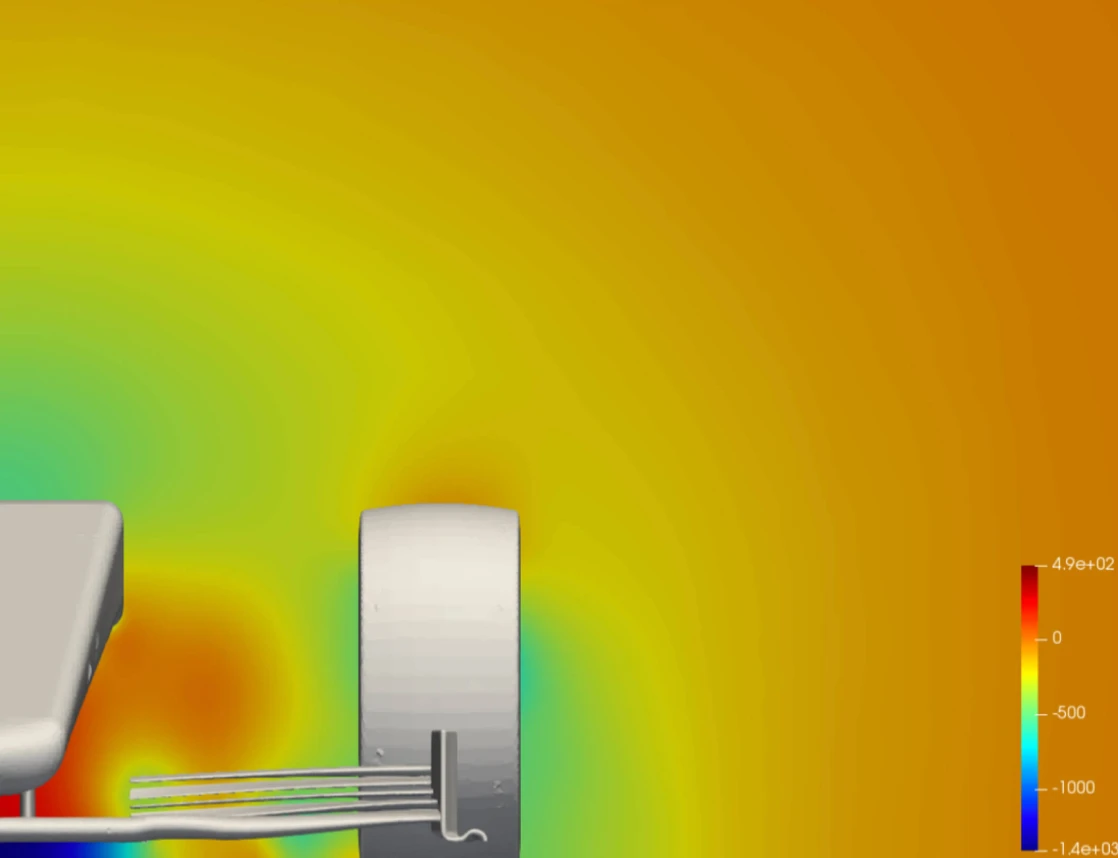

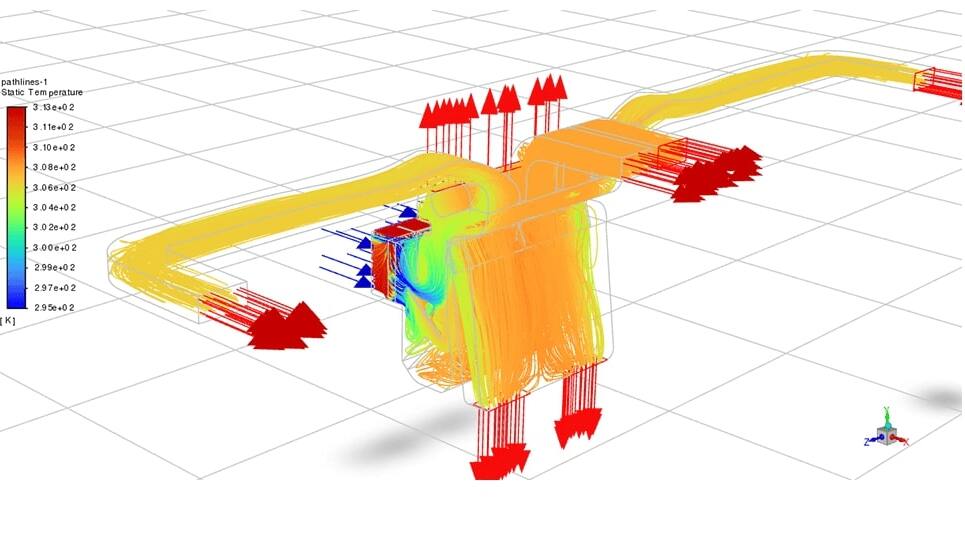

Real-Time Simulations & AI-Driven Design

Simulation workflows such as CFD or FEA require precise resource allocation. Scalable cloud infrastructure dynamically provisions compute and memory depending on the simulation phase. For example, meshing or solver stages might demand more cores or RAM, which horizontal or vertical scaling can provide. When real-time AI models are used for surrogate modeling or design optimization, scalability ensures that the entire process (simulation, inference, feedback) is uninterrupted. This helps engineers shorten development cycles and integrate AI-assisted design exploration directly into their CAD tools, thus supporting business growth.

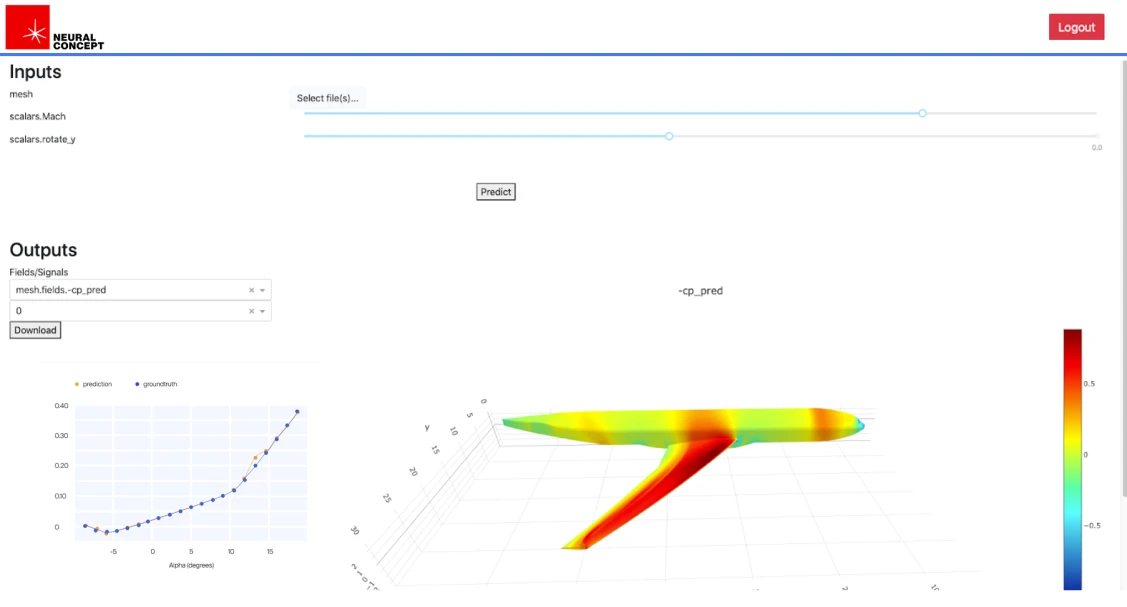

Neural Concept’s AI-Optimized Cloud Solutions

Neural Concept develops 3D deep learning solutions based on geometric deep learning, leading to CAE simulation acceleration. Their platform benefits directly from scalable cloud resources. It enables users to train and deploy 3D deep learning models over multiple cloud nodes. The platform intelligently distributes workloads to minimize latency and maximize hardware usage.

For industrial clients in automotive, aerospace and other industries, this means faster performance prediction, less reliance on traditional solvers, and support for agile design workflows.

Cloud scalability ensures Neural Concept’s services remain responsive, even as dataset size or model complexity grows.

Best Practices for Implementing Scalable Cloud Solutions

Scalable systems need to be designed, not improvised. Below are key practices to ensure stability and security as workloads grow.

Choose the Right Scalability Model

Matching the scalability model to the application ensures optimal performance without over-provisioning. Selecting between vertical and horizontal scaling depends on workload characteristics.

Vertical scaling increases capacity on an existing server, which is ideal for monolithic applications or simulations needing high single-node performance.

Horizontal scaling adds multiple servers to distribute load, which is best for stateless applications, web services, and parallel AI model training.

Finally, diagonal scaling combines both methods for complex environments to balance cost and performance.

Cloud infrastructure should be architected with autoscaling groups, load balancing, and monitoring tools to adapt dynamically.

AI-Driven Automation

AI algorithms analyze usage patterns and predict demand, enabling proactive resource allocation. This minimizes latency, avoids bottlenecks, and reduces costs. Intelligent scaling improves availability and system resilience.

Security & Compliance Considerations

Use encrypted communication, role-based access, and regular audits. Align autoscaling rules with compliance standards to prevent misconfigurations during scaling events.

Future Trends in Scalable Cloud Computing

As workloads evolve and demand for real-time performance increases, scalable cloud environments are transforming. The future lies in systems that grow elastically and adapt intelligently as demand increases. Below are key trends shaping the next generation of cloud scalability:

AI-Powered Auto-Scaling

Next-gen cloud platforms increasingly integrate machine learning models to predict usage patterns and allocate resources in real time. Unlike basic threshold-based scaling, AI-driven auto-scaling considers workload characteristics, user behavior, and time-based trends, leading to a self-optimizing infrastructure that minimizes costs while maintaining performance.
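The difference between reactive and predictive scaling can be shown with a small sketch. A naive trend extrapolation stands in here for a trained machine learning model, and the request rates and per-replica capacity are hypothetical numbers.

```python
import math


def forecast_next(history, window=3):
    """Naive forecast: last observed value plus the average change per
    interval over the most recent `window` observations.

    This is a stand-in for a real ML forecaster, used only for illustration.
    """
    recent = history[-window:]
    if len(recent) > 1:
        trend = (recent[-1] - recent[0]) / (len(recent) - 1)
    else:
        trend = 0.0
    return recent[-1] + trend


def replicas_for(load, capacity_per_replica):
    """Smallest replica count (at least 1) whose combined capacity covers `load`."""
    return max(1, math.ceil(load / capacity_per_replica))


if __name__ == "__main__":
    requests_per_second = [100, 150, 200]  # hypothetical recent load
    capacity = 100                         # hypothetical capacity of one replica
    reactive = replicas_for(requests_per_second[-1], capacity)
    predictive = replicas_for(forecast_next(requests_per_second), capacity)
    print(f"reactive: {reactive} replicas, predictive: {predictive} replicas")
```

The reactive rule sizes the fleet for the load already observed, while the predictive rule sizes it for the load expected in the next interval, so capacity is ready before the spike arrives.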

Edge Computing & Hybrid Clouds

Organizations are shifting toward hybrid and edge architectures as latency-sensitive applications grow, especially in robotics, autonomous systems, and industrial IoT. Scalability means orchestrating workloads across centralized cloud and distributed edge nodes, ensuring local responsiveness without losing the benefits of cloud elasticity. Balancing on-premises resources with public cloud scalability will become standard for high-performance applications.

Neural Concept’s Role in Future AI Scaling Solutions

Neural Concept, a Swiss pioneer in 3D deep learning for engineering, exemplifies how domain-specific AI models will drive new demands for scalable infrastructure. The Neural Concept tools, used for real-time design iteration and simulation, benefit from GPU-intensive scaling, especially horizontal scaling of inference tasks over cloud nodes. As AI workflows like Neural Concept’s grow in complexity, cloud scalability will need to evolve to handle AI-specific topologies and memory loads.

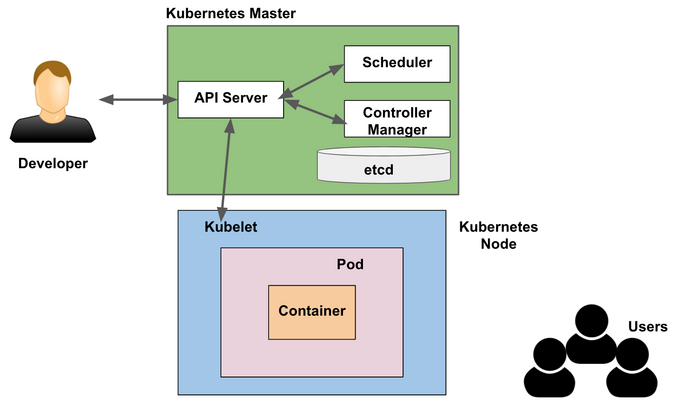

Container-Native Scaling with Kubernetes

Scalability is shifting to fine-grained orchestration at the container level. Tools like Kubernetes and Knative enable real-time autoscaling of individual microservices, enhancing API-driven and event-based architectures. Anticipate tighter integration between container runtimes, cloud platforms, and scaling policies.
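For a sense of how container-level autoscaling decides on a replica count, the Kubernetes documentation describes the Horizontal Pod Autoscaler's core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below reproduces only that ratio rule plus a min/max clamp; the function name and default limits are illustrative, and the real controller adds tolerances, stabilization windows, and handling for missing metrics.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Proportional scaling rule in the style of the Kubernetes HPA.

    Scales the replica count by the ratio of the observed metric to its
    target, rounds up, and clamps to illustrative min/max limits.
    """
    raw = math.ceil(current_replicas * (current_metric / target_metric))
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target.
    print(desired_replicas(4, current_metric=90, target_metric=60))
```

Unlike a fixed "add one instance" step, this rule jumps straight to the replica count that should bring the metric back to its target.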

Sustainability-Driven Scalability

With growing pressure to reduce carbon footprint, cloud scalability is becoming energy-aware. Future systems may scale not just based on load, but also based on data center energy mix, regional CO₂ intensity, or green compute availability. Expect scheduling algorithms that prioritize eco-efficient scaling decisions.
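One way such a scheduler could weigh its options is sketched below. The region names, free capacity, and carbon-intensity figures are invented for the example, and "pick the cleanest region that has room" is a deliberately simple rule, not a description of any existing platform.

```python
def pick_region(regions, required_vcpus):
    """Choose the region with the lowest carbon intensity that has enough
    free capacity; return None if no region can host the workload."""
    candidates = [r for r in regions if r["free_vcpus"] >= required_vcpus]
    if not candidates:
        return None
    return min(candidates, key=lambda r: r["gco2_per_kwh"])["name"]


if __name__ == "__main__":
    # Hypothetical regions with made-up capacity and carbon-intensity values.
    regions = [
        {"name": "region-north", "free_vcpus": 16, "gco2_per_kwh": 40},
        {"name": "region-west", "free_vcpus": 64, "gco2_per_kwh": 120},
        {"name": "region-east", "free_vcpus": 128, "gco2_per_kwh": 350},
    ]
    for need in (8, 32, 256):
        print(need, "vCPUs:", pick_region(regions, need))
```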

Cloud Computing Scalability: Conclusion

Cloud scalability is a cornerstone of computational infrastructure. It enables dynamic resource allocation across the compute, storage, and networking dimensions.

Vertical, horizontal, and diagonal scaling strategies provide the architectural flexibility to match specific workload requirements while optimizing costs.

As AI-driven workloads and engineering simulations increase in complexity, cloud scalability delivers the foundation for high-performance computing without fixed infrastructure investments.

Advanced technologies such as container orchestration, predictive auto-scaling, and hybrid edge deployments further enhance scalability.

All this helps organizations to maintain performance and reliability while adapting to evolving computational demands.

FAQ

What is a container?

A container is a lightweight, isolated, portable software unit that bundles an application with its libraries, dependencies, and system tools. Unlike VMs, containers share the same host OS kernel, enabling faster starts, stops, and scaling. The container-level scaling mentioned in the article refers to managing these individual containers rather than whole virtual machines.

What are the three main components of scalability?

Compute, storage, and networking. Together, these allow a cloud infrastructure to adjust processing power, data capacity, and data transfer capabilities in response to demand.

What is an example of scalability for consumers?

Streaming platforms like Netflix automatically scale servers during peak hours to handle millions of viewers without lag or service disruption.

Is cloud scalability essential for machine learning & AI development?

Yes. Training large models requires dynamic compute resources that cloud scalability provides, ensuring faster iteration and cost-effective experimentation.

What are the challenges associated with implementing cloud scalability?

The main challenges are complex configuration, cost prediction, data synchronization across nodes, and ensuring application compatibility with scalable infrastructure.

How does cloud scalability contribute to disaster recovery strategies?

By allowing rapid resource reallocation and data replication across servers or regions, cloud scalability minimizes downtime and data loss.

Can all applications benefit from cloud scalability?

No, not all of them. Legacy systems or tightly coupled architectures may not scale well without redesign.