Engineering Experience in the Age of AI

By Pierre Baqué, CEO & Co-Founder, Neural Concept

As in many other domains of technology and creativity, engineering is being shaken by the progress of AI. The ongoing adoption of AI tools for product development within leading engineering teams is only the beginning of a deep transformation that will accelerate exponentially.

The famous “paperclip maximizer” thought experiment, proposed by Nick Bostrom in 2003, is materializing in an unexpected way. Rather than an AI reinforcing itself in isolation and triggering an immediate leap in progress, we are witnessing a “human-in-the-loop” singularity: humans, with clear and meaningful purposes, are collaborating with AI systems to build ever more powerful AI algorithms and AI infrastructure, and thus to transform the world.

Since the engineering and design of physical products operate at the intersection of a structured, data-rich digital world and a complex, imperfectly measured physical world, humans and AIs will need to collaborate for the foreseeable future. But how?

Everyday AI

It took me a while to find the right way to use AI effectively. At times, I felt I wasn’t tapping into its full potential; other times, I was taking a back seat, struggling to stay focused. This reminded me of Daniel Kahneman’s distinction between “System 1” and “System 2” thinking, where System 1 is fast, intuitive, and automatic, and System 2 is slow, deliberate, and analytical. Working with AI often feels like being drawn into System 1 for a task that clearly belongs to System 2. If we want to design systems and software that truly deliver the power of AI, the equation of human-AI efficiency clearly needs to be solved.

However, the quality of an experience cannot be measured only in terms of efficiency. It is also, and perhaps most importantly, about pleasure, enjoyment and self-fulfillment. Here as well, I think that the appearance of AI agents sets a new challenge. The feeling of being useful is a key element of human satisfaction, as are novelty and the sense of accomplishment.

In a recent article, Scott Alexander, a famous rationalist thinker, explores how the abundance of information and experiences, brought by the digital era and now magnified by generative AI, affects our ability to truly appreciate experiences, especially art. At the time Scott wrote this, Genie 3, the first real-time generative 3D world model built by Google DeepMind, had not even been published yet. Its arrival raises these questions to a whole new level.

In his article, Scott concludes that the responsibility is on each of us to learn how to appreciate simple experiences.

Along the same lines, a recent article in The Economist about the “brainrot” phenomenon quoted Turing Award and Nobel Prize recipient Herbert Simon and his famous statement: “What information consumes is rather obvious: it consumes the attention of its recipients.”

I believe we should take this a step further: AI doesn’t simply assist us, it also absorbs our attention and makes us confront what we truly value.

I believe that, in an age of endless content, quantity dulls meaning. Value no longer lies in the content or the input we receive, but in the attention we give. It is our own responsibility and endeavour to be fully aware and in control of where we want to spend our attention and where we don’t. In a way, this puts humans, who are now assigned a new task, back in the spotlight.

We need to question our usage and our tendency to let our lazy minds remain in a contemplative mode, without taking direct ownership of the experience or of the outcome, which keeps us in System 1.

Engineering with AIs

These observations may guide us in defining principles for effectively using AI in engineering and product development. AI should be a tool that pushes humans to engage in deeper thinking, instead of adopting a passive approach.

We’ve seen this in practice. At Mahle, we helped engineers design their quietest blower fan ever, not by using AI to deliver a “single best” design, but by letting them explore thousands of options and understand the trade-offs behind each. At SP80, AI-driven exploration enabled a radical new boat design, now on its way to breaking a world record.

These examples show that AI is most powerful when it enhances human thinking and invites deeper reasoning — when it triggers what Kahneman would call our “System 2” thinking.

This is why, in the field of generative engineering, it is often better to use AI for one of three things: performing highly reliable, focused tasks; creating smaller components that humans can combine into larger assemblies; or generating data-driven insights from which the human can learn and understand the impact of decision variables.

By contrast, when an AI delivers a single “90% right” design, the human designer first feels disempowered, and then discouraged upon realizing that correcting the flaws often requires more effort than starting from scratch.

When using AI physics surrogates for design (a class of AI systems that quantitatively predict the real-world performance of physical systems), we observe that the most effective workflows involve generating and sorting many options and then providing tools for humans to dig deeper: conducting statistical analyses, understanding trade-offs, and measuring variable impacts. We have seen this work many times, allowing engineers to understand their disciplines better, sometimes questioning their experimental setup or imagining new design concepts to go further.

This experience is vastly superior to simply running a fast simulation that reacts to design changes. In this case, the human re-engages with System 2 — but at a higher, more strategic level.

In engineering, 3D-based AIs can also be used as a lever to generate, evaluate and rank thousands of solutions against a large number of criteria, something impossible for a human alone. This sheer force helps engineers feel freer in their design paradigm, knowing that AI will be able to pull out the best of the concepts they create.
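The generate, evaluate and rank workflow described in this section can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: `toy_surrogate` is a made-up formula standing in for a trained physics surrogate, and the design variables (`blade_angle`, `blade_count`) and objectives (`noise`, `airflow`) are hypothetical. Rather than returning a single “best” design, the sketch keeps only the designs that no other candidate beats on both objectives, leaving the trade-off to the engineer.

```python
import random


def toy_surrogate(blade_angle, blade_count):
    """Hypothetical stand-in for a trained physics surrogate.

    Returns (noise, airflow) from made-up linear formulas so that the
    example runs without any real model.
    """
    noise = 40.0 + 0.5 * blade_angle + 0.8 * blade_count
    airflow = 2.0 * blade_angle + 1.5 * blade_count
    return noise, airflow


def generate_designs(n, seed=0):
    """Sample n random candidate designs and score each with the surrogate."""
    rng = random.Random(seed)
    designs = []
    for _ in range(n):
        angle = rng.uniform(10.0, 40.0)
        count = rng.randint(5, 15)
        noise, airflow = toy_surrogate(angle, count)
        designs.append({"blade_angle": angle, "blade_count": count,
                        "noise": noise, "airflow": airflow})
    return designs


def dominates(a, b):
    """True if a is no worse than b on both objectives and better on one.

    Lower noise is better; higher airflow is better.
    """
    no_worse = a["noise"] <= b["noise"] and a["airflow"] >= b["airflow"]
    better = a["noise"] < b["noise"] or a["airflow"] > b["airflow"]
    return no_worse and better


def pareto_front(designs):
    """Keep the designs that no other design dominates (simple O(n^2) scan)."""
    return [d for d in designs
            if not any(dominates(other, d) for other in designs)]


if __name__ == "__main__":
    designs = generate_designs(1000)
    # Sort the surviving trade-off designs from quietest to loudest.
    front = sorted(pareto_front(designs), key=lambda d: d["noise"])
    print(f"{len(front)} trade-off designs kept out of {len(designs)}")
    for d in front[:5]:
        print(f"angle={d['blade_angle']:.1f} blades={d['blade_count']} "
              f"noise={d['noise']:.1f} airflow={d['airflow']:.1f}")
```

The output is a sorted list of trade-off designs, not a single answer: along it, any gain in airflow costs some noise, and deciding where to sit on that curve remains the engineer's job.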

Product design and AI

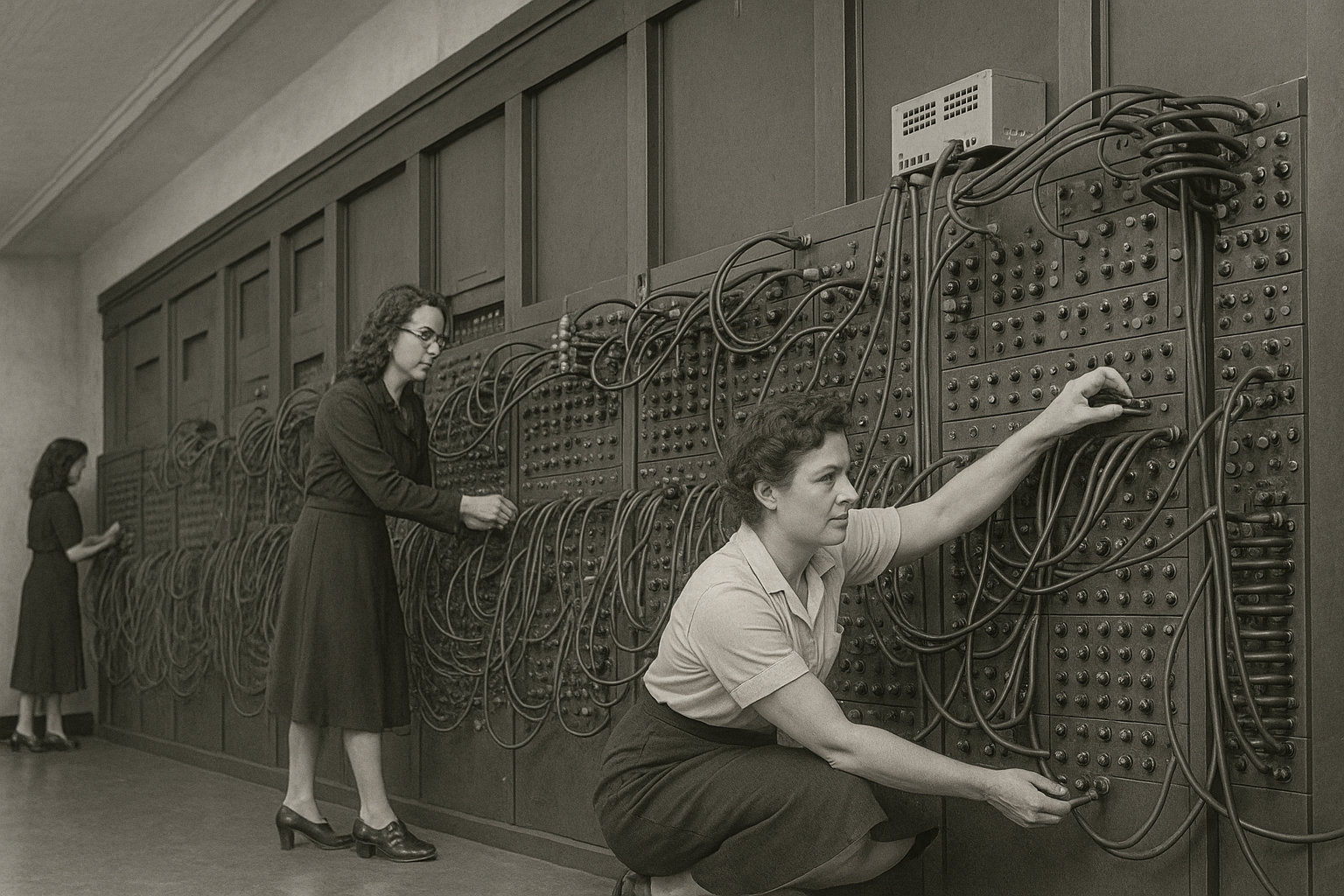

Over the summer, I met with Prof. James Zou, from Stanford, who published experiments with a lab of virtual researchers. His work presents a promising avenue of science, reproducing even complex human sociological behaviours between AIs, and obtaining interesting practical results for his research.

However, I believe that engineering work, and the tasks expected from AI assistants in engineering, will remain human-centric for the foreseeable future. This makes the virtual-researcher paradigm not directly applicable to our field, where human context remains essential.

When designing AI tools for engineering, we should focus on building experiences that pull the best out of us, rather than tools that try to make us think less. This requires careful design and assessment, because the line between thinking more and not thinking at all is sometimes very thin. It depends on adjusting the user experience to the reliability and speed of every type of AI, as well as envisioning every AI intervention in the context of the broader task at hand.

This shift will impose a stricter selection on engineers: for instance, success will require the ability to think at the level of sets of AI-generated design options, rather than focusing only on individual solutions. The new generation of engineers will need to understand and acknowledge design options that go against common engineering intuition.

As a copilot to the engineer, AI will give us the opportunity to think and build at the system level, across multiple physics, rather than remaining obsessed with the details of a single design.

It also creates a real opportunity to make design, performance understanding, and manufacturing converge into one integrated process.

Building an AI-driven future

Engineering team leaders, in partnership with solution providers, have the difficult but critical task of building workflows, and the associated engineering experiences, that force human engagement and forbid disengagement.

I am sometimes involved in conversations focused only on quantitatively measuring the technical capabilities of AI systems (engineering software coding assistants, generative models, physics models, etc.), where we lose sight of their role in an actual design chain, in the hands of an actual engineer. The reality is that, by treating AIs less as isolated, singular entities and thinking more about how they can engage engineers to think more deeply, we will extract more value from their capabilities. This might push organizations to adapt their structure and rethink the role of their engineers and how they recruit, a conversation that most executives of manufacturing companies are having anyway.

In the end, as in benchmarking, the real measure of progress is not how fast our AIs compute, but how deeply they help us understand. The medium-term future of engineering will belong to those who build systems that sharpen human attention, not replace it.

However, because AIs fail differently from humans, respond at different speeds and face distinct limitations, I trust that the “virtual employee” paradigm would slow us down in our quest to find the right user experience and human-AI interaction patterns in engineering.